Document Information

- Document Tracking Number: dof-2008-1

- Document Version: 7.0.1, 12 January 2018

- Build Version: 7.0.1.8

- Source Information: 2018-01-12, "." at commit c4a21c8bf741a32d4fe20776861d03baab74639a on branch "master" of repository core-specifications

- External Reference: DOF Protocol Specification, OpenDOF TSC, [dof-2008-1] (7.0.1, 12 January 2018)

This document is managed by the Technical Steering Committee of the OpenDOF Project, Inc. Contact the committee using the following information.

Contact Information

- Post Mail: OpenDOF Project, Inc., Technical Steering Committee, 3855 SW 153rd Drive, Beaverton, OR 97003

- Telephone: 1-503-619-4114

- Fax: 1-503-644-6708

Copyright

Copyright © 2008-2018 Panasonic Corporation, Bryant Eastham

Patents

Contributors to the OpenDOF Project make a non-enforcement pledge related to any patent required to implement the protocols described in this document. For more information see https://opendof.org/pledge.

License

1. About This Document

This document contains information about the DOF Protocol Stack. This is critical information for anyone who needs to work with the protocols themselves, and contains useful background information for those interested in the architecture and inner workings of DOF systems.

This information is highly technical. This document serves as a specification and reference against which someone could implement networked software that interoperated with DOF products without using existing libraries.

1.1. Audience

The primary audience of this document is people implementing libraries that use DOF protocols. Others that are concerned about how DOF protocols work on a network at a very low level can also benefit from this document.

Readers should be familiar with technical protocol documentation. This document is similar to an 'RFC' for DOF protocols, and familiarity with the language used in these types of documents is helpful.

This document is not required reading for those who need to use existing DOF libraries, although system designers may benefit from an understanding of this information. In particular, the information related to security and connections can help system designers to better understand how DOF systems work.

1.2. Authority

This document, in its English form, is the authoritative document for DOF protocols. Due to the detailed nature of protocol design, it may be difficult for a reader to implement these protocols correctly based on a translated document. When questions arise, the English version is authoritative.

All implementations that claim to be DOF compliant must satisfy the requirements set forth in this document.

This document is managed by the Technical Steering Committee of the OpenDOF Project, Inc., referred to as 'ODP-TSC'. The inside cover of this document contains contact information for the Technical Steering Committee.

The web site for the Technical Steering Committee is https://opendof.org/tsc.

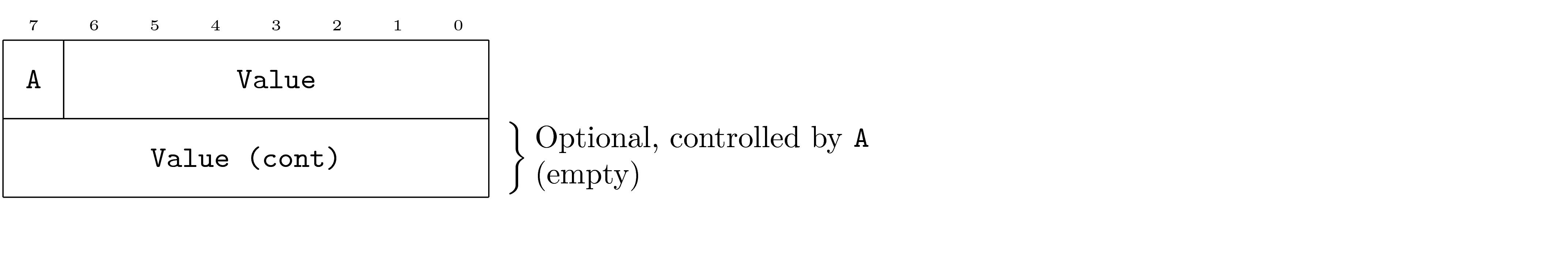

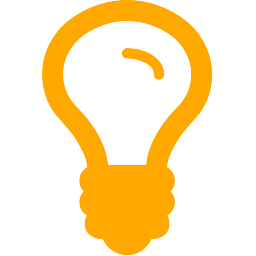

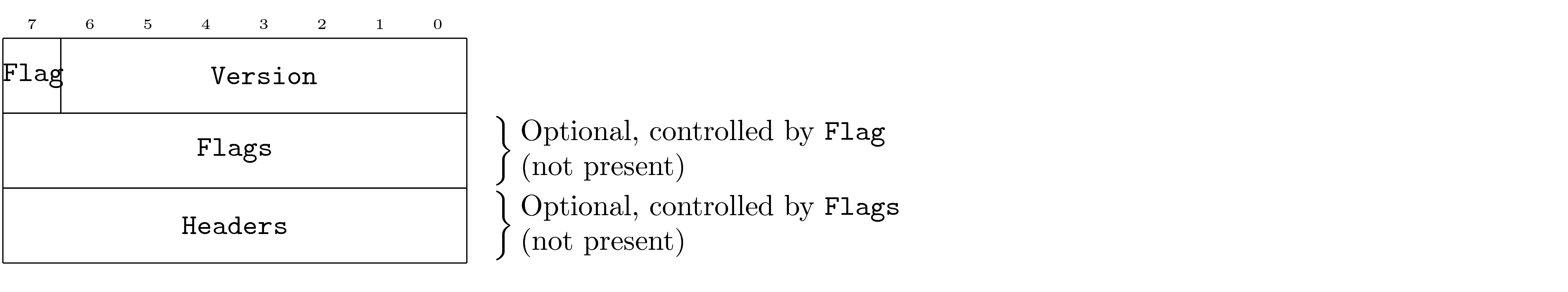

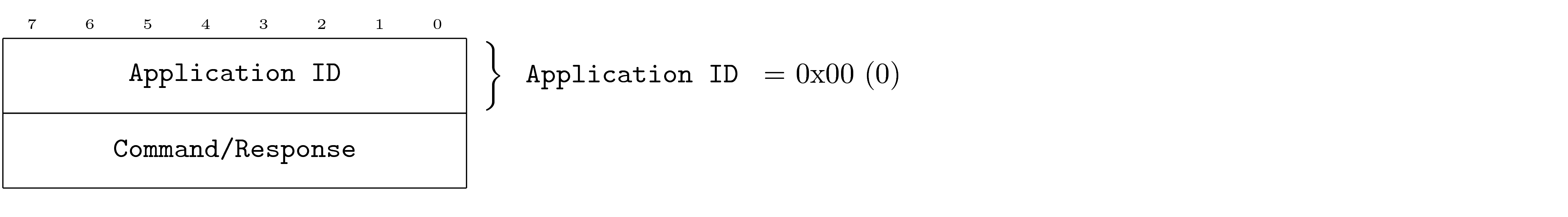

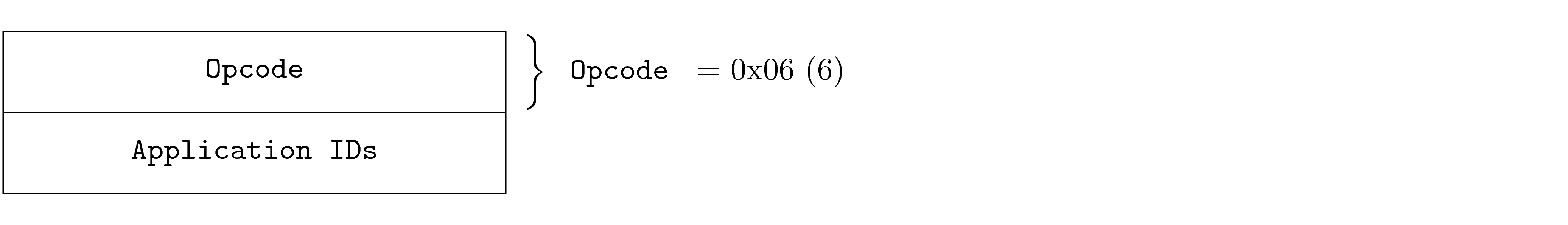

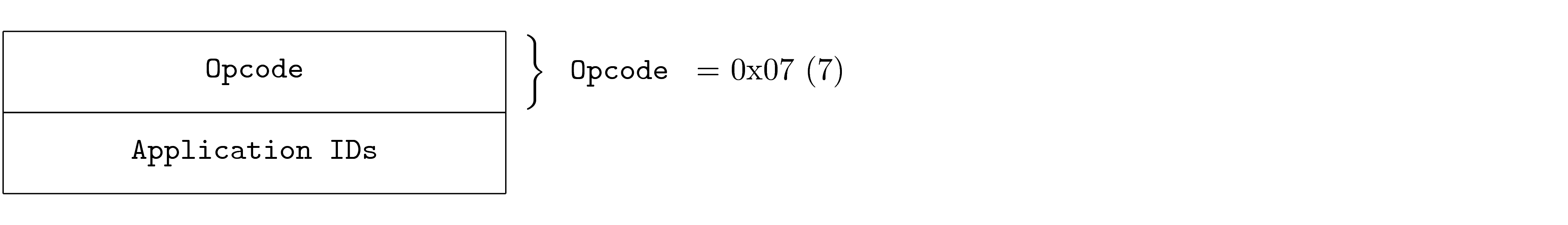

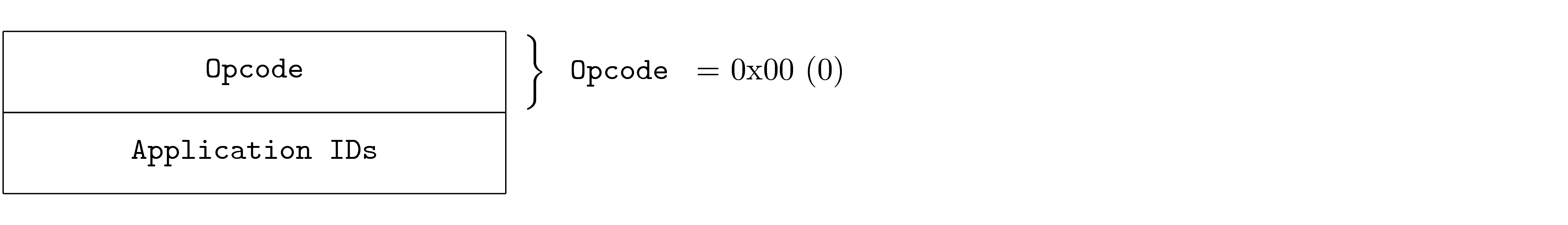

1.3. PDU Definitions

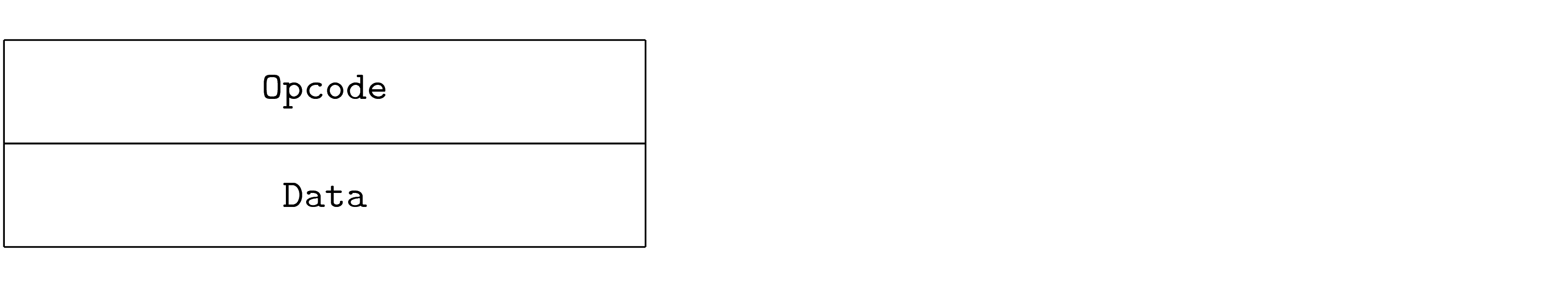

This document deals with the transmission of information on a network. In order to describe this, diagrams show different fields, their network order, and positioning.

All DOF protocol documentation uses a similar diagramming style, discussed in this section. The word 'PDU', which stands for 'Protocol Data Unit', indicates these diagrams.

1.3.1. Context

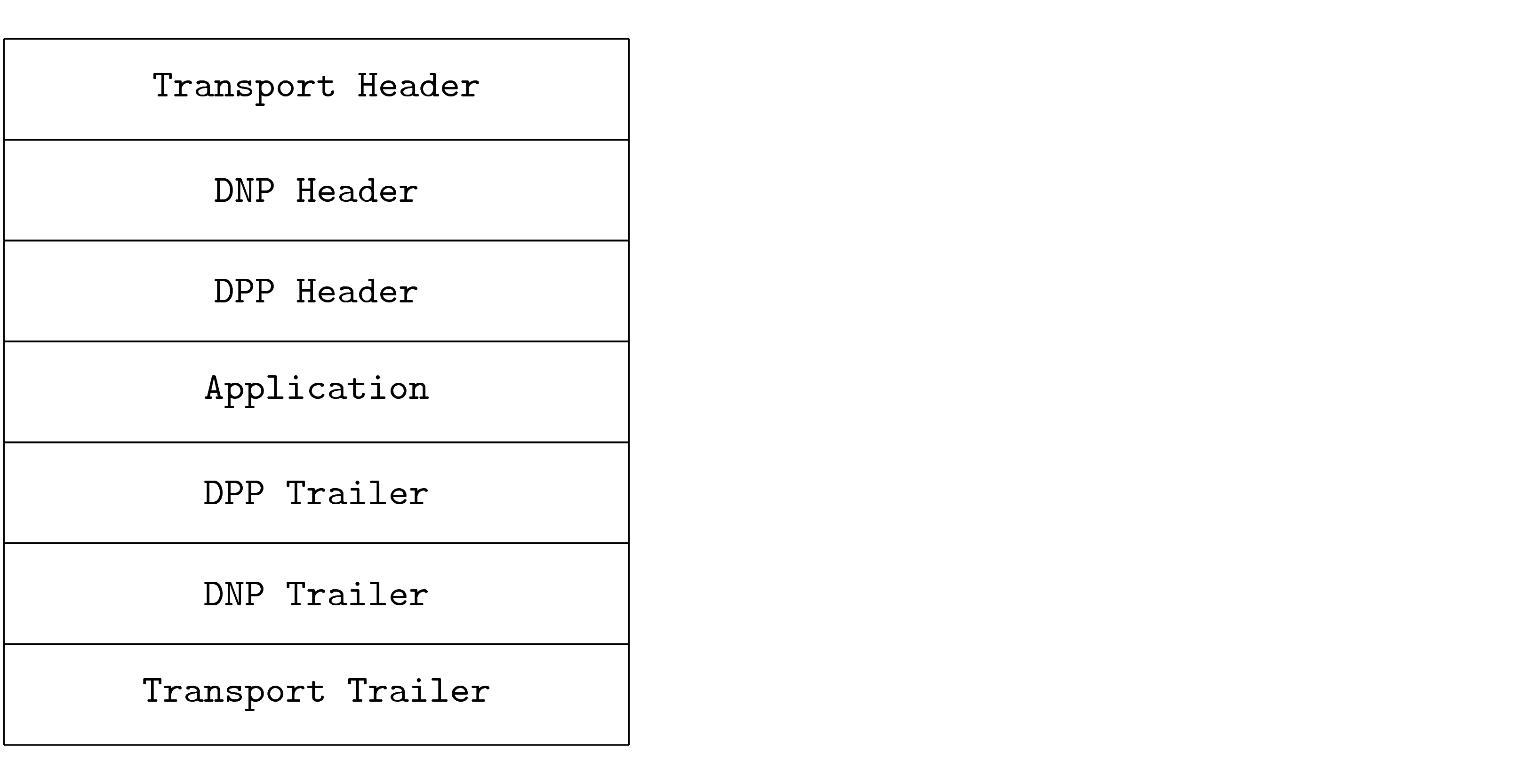

Each PDU represents information sent on the network in some context. This usually means that there is information sent 'surrounding' the PDU itself. This information provides the 'context' for the PDU.

For example, the context for the DOF Protocol Stack itself is a network transport. This could be UDP/IP, TCP/IP, or any other transport. The transport will require certain headers and trailers, but they are not shown in each DOF Protocol Stack PDU. In this example the transport provides the context of the DOF Protocol Stack PDU.

In a similar way, the DOF Protocol Stack defines the context for the other DOF protocols. The Object Access Protocol, for example, can use the DOF Protocol Stack for its context. Just as the transport headers are not shown for the DOF Protocol Stack, the DOF Protocol Stack headers are not shown for the Object Access Protocol.

Understanding the context requires understanding the relative position of the different layers in the protocol stack. Each PDU defines the ordering of all of its parts, and may indicate that it is itself an instance of some other general PDU format. This means that it is always possible to start at a high-level PDU definition and understand it completely, but not always possible to identify everywhere that a particular PDU may be used (as a part of some other higher-level PDU).

Each protocol specification defines its specific contexts. The context definition includes a description of the underlying stack layer (or the containing layer), and defines the information that must be available from that layer (for reception) or passed to that layer (for transmission).

As an example, the context of the DOF Network Protocol is a transport. The transport has a requirement to provide the addresses of nodes, and so part of the context for the transport is the sender address (for reception) or the target address (for transmission). Any transport that will work with the DOF Protocol Stack must meet the context requirements.

1.3.2. Generalization

Many times a particular PDU will be a specific type of a more general PDU. In these cases, the PDU will indicate that it is an 'instance' of the more general type.

1.3.3. Qualifications

Each PDU will have qualifications placed on its use. The general qualifications include security and transport requirements. The following terms identify these qualifications. Others may be present as well.

Transport Qualifications

There are three major transport categories for DOF protocols (see the DOF Protocol Specification, Transport Requirements). These are None, Lossy, and Lossless. There are also three addressing types: Unicast, Multicast, and Broadcast. PDUs indicate the ability to use each of these categories as follows:

Session: Combinations of None, Lossy (2-node, n-node), or Lossless.

Addressing: Combinations of Unicast, Multicast, or Broadcast.

Security Qualifications

Each PDU categorizes security qualifications according to the major aspects of security (see the DOF Security Specification, Overview). These are Encryption, Data Integrity, Authentication, and Access Control. Encryption prevents viewing of the PDU, Data Integrity ensures that others do not modify the PDU, Authentication indicates knowledge of the nodes involved in the communication, and Access Control restricts those able to use the PDU.

Data Integrity is required according to the DOF Protocol Stack specification for all secure packets. Authentication is required whenever permissions are involved, or when it is important to know something about the relationship between the sender and receiver. Encryption is required if the contents of the packet contain information that must not be visible to potential attackers.

In addition, there is the case of unsecured communication. PDUs that do not require security will list 'Unsecured' as a possible choice. Finally, certain PDUs are required to be Unsecured, and are identified as such.

Each application PDU must specify its security requirements. If Access Control is required, the PDU will indicate the permissions required for the command and for any response. However, it is typical for all of the PDUs of a given protocol to share the same requirements, and so the protocol specification may define its security qualifications in a single section. In this case, the individual PDUs should refer back to this section to avoid confusion.

It is also typical for applications to require the security of the session. A PDU indicates this as 'session security', and is its own security qualification. If the PDU allows the 'None' session type then this implies that Unsecured is allowed, as session type 'None' cannot be secure.

For PDUs that refer to session security or a common security section, one of the following formats is used:

Security: See the section 'Security Qualifications'.

or

Security: Session.

The PDU uses the following identifications to specify the security qualifications for a PDU:

Unsecured: (description).

Encryption: (description).

Message authentication: (description).

Permission (command): (description).

Permission (response): (description).

For each of these, the (description) varies. There are many different combinations of values, as described here.

Unsecured can have the values 'Required', 'Allowed', 'Not Allowed'.

Encryption can have the values 'Required', 'Allowed', 'Not Allowed'.

Message authentication can have the values 'Required', 'Allowed', 'Not Allowed'.

The specific PDU determines the permissions required (for both command and response).

There are combinations that are not valid. The following table defines all allowed combinations; if a combination does not appear in the table, then it is invalid.

In general, security theory links Encryption and Data Integrity. If one is 'Required' then the other must at least be 'Allowed'. Encryption and Data Integrity are the opposite of Unsecured. If either is 'Required' then unsecured must be 'Not Allowed'. Again, note that since data integrity is a common requirement for all secure packets the PDUs do not list it separately.

| Unsecured / Encryption / Message Authentication | Description |
|---|---|
| Required / Not Allowed / Not Allowed | Indicates the PDU must be unsecured. |
| Allowed / Allowed / Allowed | Indicates there are no security requirements. |
| Not Allowed / Allowed / Allowed | Indicates the PDU must be either encrypted or authenticated. This is the lowest level of security possible for a PDU. |
| Not Allowed / Required / Allowed | Indicates that encryption is required, and data integrity is optional. |
| Not Allowed / Allowed / Required | Indicates that encryption is optional, but authentication is required. |
| Not Allowed / Required / Required | Indicates both encryption and authentication are required. |
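The table of allowed combinations lends itself to a simple lookup. The following is a minimal sketch, not part of the specification: the names `VALID_COMBINATIONS` and `is_valid_combination` are hypothetical, and the short descriptions are paraphrases of the table rows.

```python
# Allowed (Unsecured, Encryption, Message Authentication) combinations,
# transcribed from the table above. Any combination not listed is invalid.
REQUIRED, ALLOWED, NOT_ALLOWED = "Required", "Allowed", "Not Allowed"

VALID_COMBINATIONS = {
    (REQUIRED, NOT_ALLOWED, NOT_ALLOWED): "must be unsecured",
    (ALLOWED, ALLOWED, ALLOWED): "no security requirements",
    (NOT_ALLOWED, ALLOWED, ALLOWED): "must be encrypted or authenticated",
    (NOT_ALLOWED, REQUIRED, ALLOWED): "encryption required",
    (NOT_ALLOWED, ALLOWED, REQUIRED): "authentication required",
    (NOT_ALLOWED, REQUIRED, REQUIRED): "encryption and authentication required",
}


def is_valid_combination(unsecured, encryption, message_authentication):
    """Return True only if the triple appears in the table of allowed combinations."""
    return (unsecured, encryption, message_authentication) in VALID_COMBINATIONS
```

For example, `is_valid_combination("Required", "Required", "Allowed")` is false, because a PDU that must be unsecured cannot also require encryption.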

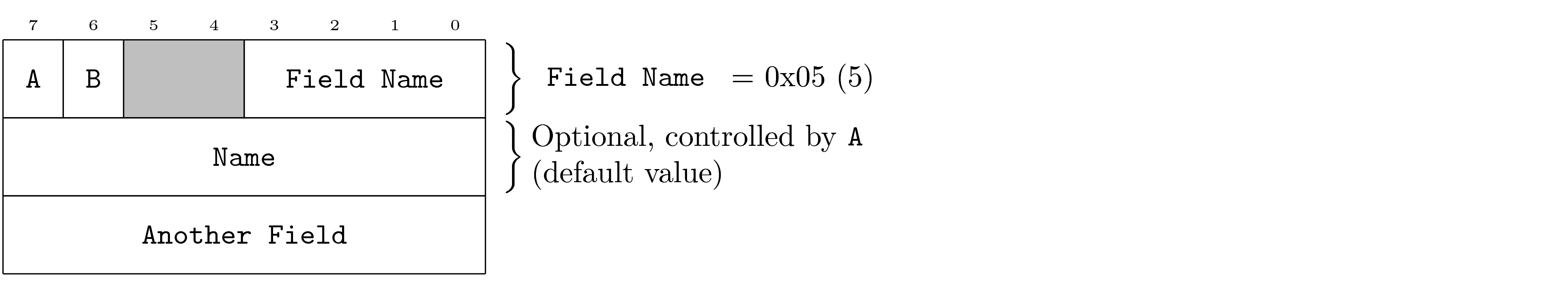

1.3.4. Fields

Each PDU shows a container, represented as a sequence of fields. The table fully describes each field.

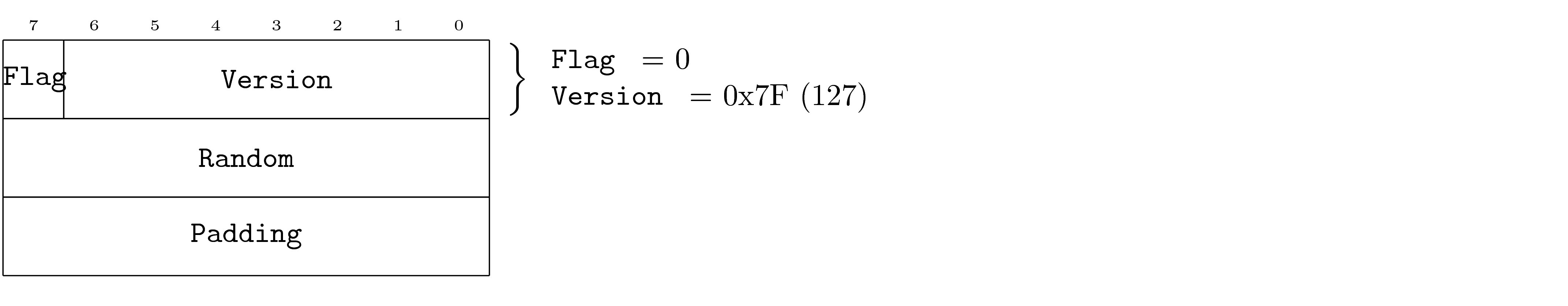

The following is an example of a PDU description.

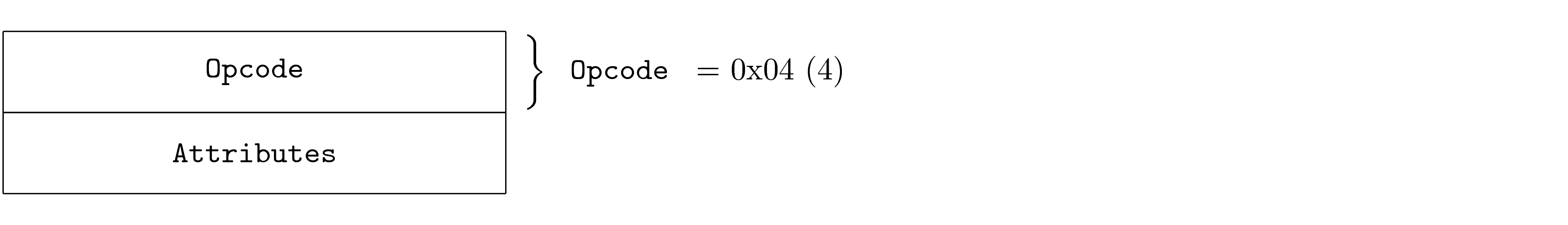

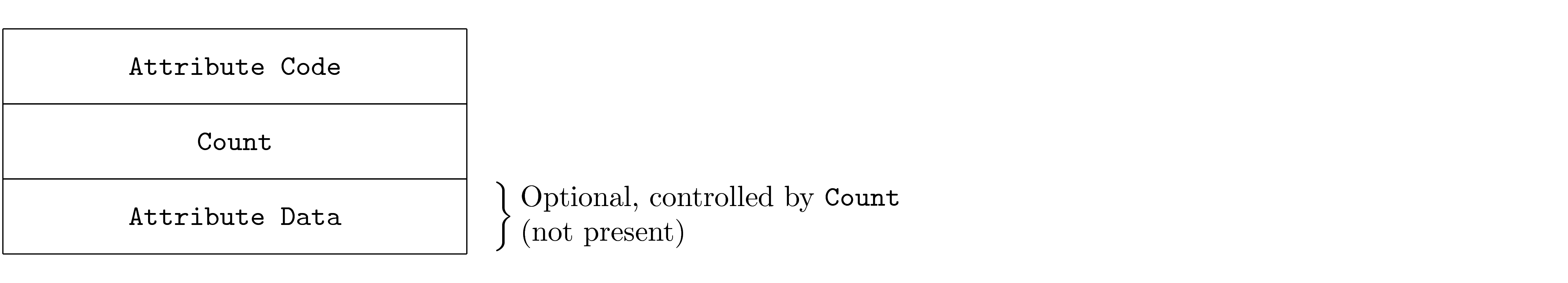

Example PDU

The container represents the PDU. The diagram shows fields first to last, left to right and top to bottom. This means that the first fields appear on the wire before the last fields. This is a general principle: things on the top and toward the left in the diagrams appear on the wire before things on the right and bottom. Note that the transport is free to use whatever ordering it must, and memory layout may differ from the PDU definition. However, the transfer of a PDU from a program, over an arbitrary transport and into another program must maintain the perceived ordering between the two programs. PDU diagrams are always shown MSB first (most significant byte) and msb first (most significant bit) unless otherwise indicated.
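The ordering rule can be illustrated with a short sketch. The two-field layout below (a 16-bit field followed by an 8-bit field) is purely hypothetical and does not correspond to any PDU defined in this document; it only shows that the first field, and the most significant byte of each field, appear first on the wire.

```python
import struct


def encode_example(first: int, second: int) -> bytes:
    """Encode a hypothetical PDU: a 16-bit field followed by an 8-bit field."""
    # ">" selects big-endian (most significant byte first) with no padding;
    # "H" is an unsigned 16-bit value and "B" is an unsigned 8-bit value.
    return struct.pack(">HB", first, second)


def decode_example(data: bytes):
    """Decode the same hypothetical layout back into its two fields."""
    return struct.unpack(">HB", data)
```

With this layout, `encode_example(0x1234, 0x56)` produces the bytes `12 34 56` in that order.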

Each field identifies its contents and provides information necessary to understand its use.

In the example above, the diagram shows the first six fields of the container.

Each field is named, with the exception of reserved fields which are indicated as being grayed out.

Field names use monospace text similar to that used in program listing.

The third field shown is indicated as reserved.

Reserved bits have special requirements described in the DOF Protocol Specification.

Several different field cases are typical, described in the following sections.

Typical Field

A typical field defines its name, its type, and its description.

As shown, this field is not optional, and so it must appear in the position indicated by the diagram.

The PDU uses Field Name throughout the related documentation to refer to the field.

The type of the field is a reference to some other defined PDU.

Otherwise, the PDU indicates the size of the field and any defined structure.

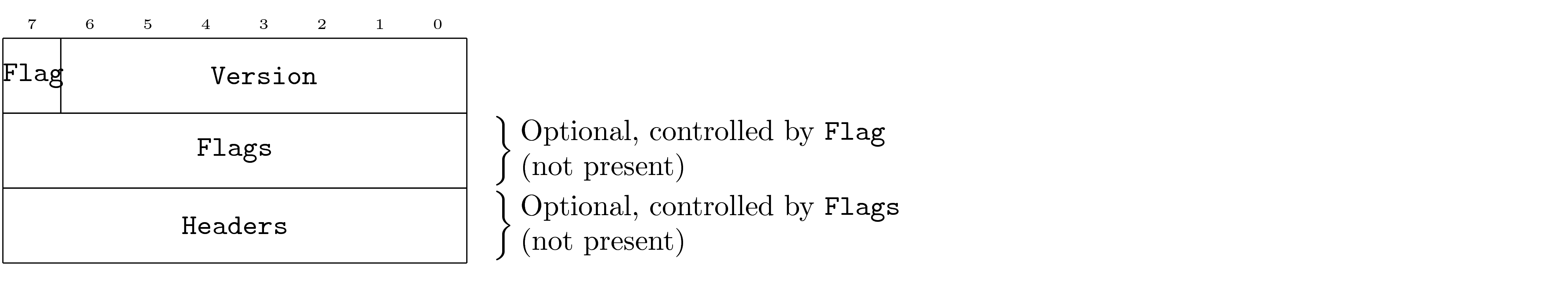

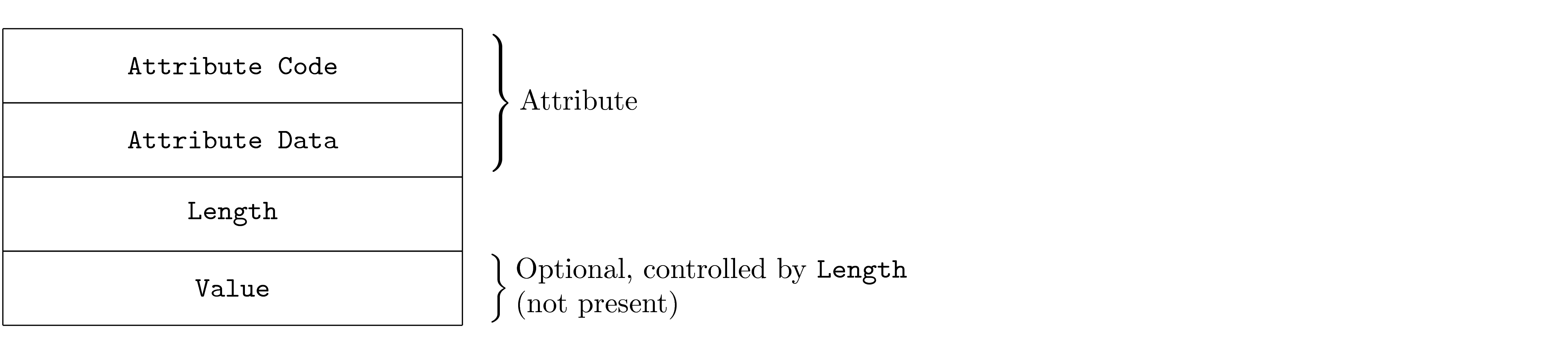

Optional Field

An optional field is similar to the typical case, but is indicated as optional in a note to the right. Each optional field will indicate what controls whether or not it is included as well as the default value that the field takes on when it is absent.

Fixed Values

Fields may have required values based on the specific PDU. This is common in the case of a PDU being an instance of another PDU, where the specific instance requires certain field values. In this case, it is not valid for the PDU to contain values other than those specified; a PDU that does so is invalid.

The required value is shown to the right of the field as a comment.
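The relationship between a general PDU and an instance with fixed values can be sketched as follows. Everything here is an assumption made for illustration: the `GeneralPdu` type, its field names, and the fixed values are hypothetical and are not taken from any DOF PDU definition.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GeneralPdu:
    """A hypothetical general PDU with three fields."""
    kind: int
    flags: int
    payload: bytes


# A hypothetical instance of GeneralPdu that fixes two of the field values.
FIXED_VALUES = {"kind": 0x01, "flags": 0x00}


def is_valid_instance(pdu: GeneralPdu) -> bool:
    """The PDU is a valid instance only if every fixed field has its required value."""
    return all(getattr(pdu, name) == value for name, value in FIXED_VALUES.items())
```

A receiver following this pattern would treat `GeneralPdu(kind=0x02, flags=0x00, payload=b"")` as invalid for this instance, even though it is well formed as a general PDU.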

Bit Fields

It is common for PDUs to leverage a single byte to store multiple fields (bit fields). The packing of multiple fields into a single byte is indicated as shown in the earlier example. This packing of bits may also leave space that can be indicated as reserved.

The PDU indicates reserved bits visually, although the PDU does not individually name these fields.

Note that bit fields may specify default values just as other fields. However, single bit fields do not show their hexadecimal representation. In addition, bits without names that have required values may indicate their values in the place of the name.
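As a rough illustration of bit-field packing, the sketch below packs three fields into one byte, msb first. The layout is hypothetical (a 1-bit `flag`, a 3-bit `version`, two reserved bits, and a 2-bit `mode`) and is not a DOF PDU. Writing the reserved bits as zero is also an assumption made only for this example; the actual requirements for reserved bits are described elsewhere in the DOF Protocol Specification.

```python
def pack_byte(flag: int, version: int, mode: int) -> int:
    """Pack a hypothetical layout into one byte, msb first:
    bit 7 = flag, bits 6-4 = version, bits 3-2 = reserved, bits 1-0 = mode."""
    if not (0 <= flag <= 1 and 0 <= version <= 7 and 0 <= mode <= 3):
        raise ValueError("field value out of range")
    # Reserved bits 3-2 are left as zero (an assumption for this sketch).
    return (flag << 7) | (version << 4) | mode


def unpack_byte(value: int):
    """Extract (flag, version, mode), ignoring the reserved bits."""
    return (value >> 7) & 0x1, (value >> 4) & 0x7, value & 0x3
```

For instance, `pack_byte(1, 5, 2)` yields `0xD2`, and `unpack_byte(0xD2)` recovers `(1, 5, 2)`.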

1.4. Specifications, Notes and Warnings

This is a technical specification document. It is critical to understand how the document indicates specification items. There are also annotations for notes (implementation notes or other information that is worthy of extra notice) and warnings (pitfalls or extremely critical information). In addition, there are implementation notes, which point out optimizations that are not obvious.

Each type of callout ends either when another callout begins or when the text goes back to the normal indentation.

This is a note. The text is inset and there is a header to indicate the beginning of the text.

This is an implementation note. The text is inset and there is a header to indicate the beginning of the text.

This is a warning. The text is bold inset and there is a different header to indicate the beginning of the text.

This is an example. This is the text.

Following the summary is descriptive information about the specification item. Each specification item has a unique number assigned.

1.5. Printing This Document

The format of this document is for two-sided printing on US Letter paper.

1.6. Comparing Documents

The easiest way to compare versions of this document is to compare the corresponding source. The source format is AsciiDoctor, which is a markup-style text format. This format can be compared using any text comparison tool, including those that work with source control documents. To facilitate determining the source for each document there is information about the commit, branch, and repository along with the document version information. In addition the following guidelines are followed to make things easy to compare:

- The input files put each sentence on a separate line, with no hard line-wrapping.

- To the extent possible, macros and templates are used to determine formatting and structure.

- Graphics and diagrams also use text formats.

If all you have available is a compiled document (PDF or HTML) then there are tools available to compare them. This section summarizes some of these methods with their pros and cons.

1.6.1. Text Comparison

There are several free tools that will do comparisons of the text of a PDF. One of these is the xdocdiff plugin for WinMerge. The plugin extracts the text from the PDF (including some information like page numbers), and WinMerge then compares the text.

WinMerge is available from http://winmerge.org. The xdocdiff plugin is available from http://freemind.s57.xrea.com/xdocdiffPlugin/en/index.html.

1.6.2. Graphical Comparison

In order to compare formatting, or to see the changes in the context of the document, a graphical compare is required. There are several free tools, but each has limitations.

- diff-pdf, available from https://github.com/vslavik/diff-pdf, shows an overlay of the matching pages in different colors.

- DiffPDF, available from http://www.qtrac.eu/diffpdf.html, shows side-by-side red-lined differences of matching pages.

Both of these tools suffer from their page-by-page comparison methods. When content shifts because of additions or removals, they start detecting changes at each page boundary. DiffPDF has the ability to select comparison ranges, allowing the program to synchronize at the beginning of each section, but this requires manual configuration and can be difficult for large documents.

There are also commercial tools that do an excellent job at comparing PDF documents. The first is Adobe Acrobat (http://www.adobe.com/products/acrobat.html), which in later releases has a 'Compare Document' feature. The result of the comparison is an annotated PDF, and hovering the mouse over the change will show the details.

Another commercial tool is PDF Content Comparer (http://www.inetsoftware.de/products/pdf-content-comparer) from i-net Software. This tool does side-by-side comparison, but has an intuitive auto-synchronize that keeps similar content lined up even when additions or deletions have occurred.

2. Overview

This document discusses the protocols and theory behind the Distributed Object Framework, or DOF.

The descriptions of DOF protocols are based on the OSI protocol vocabulary. The target systems (possibly embedded microprocessors) on which these protocols are implemented necessitate some optimizations and other modifications to that standard.

This document discusses the common aspects of the DOF Protocol Stack. It does not cover the details of the various DOF application protocols. Application protocols each have separate specification documents with the exception of the DOF Session Protocol (DSP) which is covered in this document.

There are three main categories of DOF protocols. The first are application protocols. These protocols sit at the top of the protocol stack, and are the protocols that most engineers think of when they think of protocols at all. Examples of standard application protocols are FTP (File Transfer Protocol), HTTP (Hyper-Text Transfer Protocol), Telnet and SMTP (Simple Mail Transfer Protocol).

Five application protocols are critical to DOF systems:

- DOF Session Protocol (DSP). This protocol allows connected nodes to negotiate options for the protocols that they will use (including which protocols they will use).

- Object Access Protocol (OAP). This protocol allows a device to present Properties, Events, Methods, and Exceptions to clients.

- Ticket Request Protocol (TRP). This protocol manages key distribution.

- Ticket Exchange Protocol (TEP). This protocol manages security and access control.

- Secure Group Management Protocol (SGMP). This protocol manages secure groups.

Application protocols require the services of other protocols to do their work. These other protocols form the remaining two main categories. The first are the typical stack layers:

- DOF Presentation Protocol (DPP). This protocol handles encryption and defines which application protocol is being used.

- DOF Network Protocol (DNP). This protocol allows for encapsulating other DOF Protocols over a variety of transports.

The final main category contains the encryption modes. These are both application protocols and can appear in other stack layers: they define the behavior of the DOF Presentation Protocol when used securely, and they may contain application PDUs.

Of course there are other standard networking protocols that are used in DOF solutions. A primary example is TCP/IP.

2.1. The OSI Model

The OSI model for network protocols looks like this.

Each layer in the protocol relates to three different layers: the layer above it in the stack, the layer below it and (virtually) to the corresponding layer in the receiving stack.

While the OSI layering scheme is a powerful abstraction, it is not realistic to implement a fully abstracted stack on an embedded platform, and in fact, many full operating systems combine at least some of the layers. Even so, putting different functionality into 'layers' and defining a protocol between those layers can achieve the goals of modularity and clarity.

With this background, it is useful to identify the specific requirements that the network protocol has and assign them to layers.

2.2. Network Layering

In order to consolidate network features into manageable groups, designers group protocols by the functionality they provide. In many cases, these layers are logical, meaning that there is no externally defined API for a particular layer but its functionality represents a "meta-layer" that provides the functionality of many logical layers. This simplifies implementation and reduces required header size. Defined layers should have an associated API.

This document does not describe the standardized layers other than to identify that in DOF protocols several of the layers are combined as described.

2.3. Control Authority

The DOF protocols are controlled by the Technical Steering Committee of the OpenDOF Project, Inc. (ODP-TSC).

The ODP-TSC manages the DOF Protocol Stack specification, including all its layer and application protocols. Prior approval is required for all changes to the protocols, or additions to the protocols.

2.4. Summary of Externally Assigned Numbers

DOF protocols use several assigned numbers from different standards bodies. This section summarizes these numbers. Assignments that are associated with a specific transport, like Internet Protocol (IP) are in the specification document associated with that transport.

2.4.1. SMI Private Enterprise Number

The IANA has assigned the decimal number 4561 for DOF protocols. See the IANA site for more information.

2.4.2. ETHERTYPE

The IEEE has assigned the hex number 0x8876 for DOF protocols. See the IEEE site for more information.

2.4.3. PPP

The IANA has assigned the hex number 0x4027 for DOF protocols. See the IANA site for more information.
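The assigned numbers in this section can be captured as constants. The sketch below also shows one way an implementation might recognize a DOF frame on Ethernet; the constant and function names are hypothetical, and the check assumes an untagged Ethernet II frame (6-byte destination, 6-byte source, 2-byte EtherType), so VLAN-tagged frames are not handled.

```python
import struct

# Values as listed in this section; the names are chosen for illustration.
DOF_ENTERPRISE_NUMBER = 4561  # SMI Private Enterprise Number (decimal)
DOF_ETHERTYPE = 0x8876        # IEEE EtherType
DOF_PPP_PROTOCOL = 0x4027     # PPP protocol number


def is_dof_ethernet_frame(frame: bytes) -> bool:
    """Return True if an untagged Ethernet II frame carries the DOF EtherType."""
    if len(frame) < 14:
        return False  # too short to contain a full Ethernet header
    # The EtherType is the big-endian 16-bit value at byte offset 12.
    (ethertype,) = struct.unpack_from(">H", frame, 12)
    return ethertype == DOF_ETHERTYPE
```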

3. Transport Requirements

This document uses the word 'transport' to represent the underlying network that is used to communicate DOF protocols and the software that provides that network. A fundamental goal of DOF specifications is to be transport agnostic, meaning that almost any transport can be used. This section discusses common transport terms and DOF transport requirements. Anyone implementing a DOF transport should be very familiar with the terms and requirements of this section.

The function of a transport is to exchange information between nodes in a network. In doing this it will leverage transport addresses to identify the endpoints of the communication. Further, it will typically exchange information in a logical unit called a datagram.

Throughout this document, the word 'datagram' refers to this unit of information transfer. It is equivalent to a buffer of data with an associated length. Related to a datagram is a PDU, or Protocol Data Unit. This document uses the word 'PDU' to refer not just to the datagram, but also to the definition of the contents of the datagram. The term datagram appears extensively in network terminology, and any confusing uses of the term (those that do not refer to a unit of information transfer) will be qualified.

There are several terms related to transports defined in this section. Each of these terms appears in networking literature, and even the standard definitions can be confused. Throughout all DOF documentation the following definitions will be used, even if they are slightly different than accepted industry definitions. Further, many of these terms are important for transport implementations, but not critical to the definition of the DOF protocols. Terms that are used by the DOF protocols are defined as part of the 'context' at the end of this section. The context defines the information passed from the transport to the stack.

The following terms also appear later in this section if more discussion is required:

- Broadcast

A type of datagram where all nodes on a network are receivers. Broadcast datagrams are always associated with receiving servers. The term broadcast is also used in the context of a session, and in this case, it means that the datagram is sent to all nodes in the session. However, this second use of the term usually relates to application, rather than transport, behavior.

- Client

The node that takes the first action in establishing a session, or the node that sends a datagram to a server. Note that this definition refers to a particular action, not the behavior of an application as a whole. It is common for peer-to-peer systems to have nodes that are both client and server at the same time from an application perspective.

- Connection

A 2-node session monitored in such a way that notification will occur if the connection is 'broken' (meaning that datagrams can no longer be transferred). A session converts into a connection through monitoring, whether done by the operating system, library, or application. DOF protocols do not require connections, although they are typically provided (if requested) by most transports. In a layered protocol stack, once a session converts to a connection it appears as a connection to all higher levels of the stack. For example, a TCP transport provides a connection to the lowest DOF stack layer. Once a TCP connection is established (using a TCP server), DOF protocols do not need to do anything in order to maintain the connection. If the connection is broken (due to network failure or other reasons) then the DOF implementation will be notified (typically by the operating system or TCP implementation).

- Datagram Transport

A transport type that exchanges datagrams from node to node, preserving the boundaries of each datagram. DOF protocols are based on datagrams, and so it is natural to use a datagram transport.

- In-Order

A type of datagram delivery where the order in which datagrams are sent is guaranteed to be the order in which they are received. It is possible to have in-order and lossy, preserving order even if some datagrams are lost. Usually, in-order transports are lossless.

- Lossless

A lossless transport is one in which the nodes are guaranteed to receive each datagram sent (unless the transport fails).

- Lossy

A lossy transport is one in which the nodes are not guaranteed to receive each datagram sent.

- Multicast

A type of datagram that designates a set of nodes as receivers. Multicast datagrams are always associated with receiving servers.

- Orderless

A type of datagram delivery where the order in which datagrams are sent is not guaranteed to match the order in which they are received.

- Server

Part of the transport on a node that is able to send and receive datagrams, and establish transport sessions and connections. Its ability to send datagrams may require additional state (such as a destination transport address). Not all servers provide all of these capabilities, however. For example, a typical TCP server will not accept a datagram, cannot be used to send a datagram, and will only establish connections. Servers are associated with a specific transport address that is the target address for packets sent to the server and the address used to request sessions/connections from the server.

- Session

A relationship between a set of nodes where each node maintains state regarding the other. Sessions can exist at a variety of different levels (transport, security, application, etc.), and so the use of this term may be ambiguous. In this case the use should be clarified by indicating the type of session. For example, a UDP transport provides a server or session to the DOF implementation. State is provided that would indicate the nodes involved in the session, but maintaining any sense of continuity (providing a connection if one is required) is the responsibility of the DOF implementation in this case. In many cases the DOF implementation requires a session for certain behavior, relying on the shared state available to both nodes. Sessions typically refer to a set of 2 nodes (the client and the server). However, it is possible to have n-node sessions. In this case all of the nodes attempt to maintain state. A lossless session will be able to track shared state exactly, where a lossy session may not be able to synchronize state exactly.

- Streaming Transport

A transport type that does not preserve datagram boundaries from node to node. DOF protocols are based on datagrams, and so in the case of streaming transport the specification must include additional information to preserve datagram boundaries.

- Unicast

A type of datagram where a single receiver is identified.

There is a difference between lossy/lossless and in-order/orderless. However, this document treats them as the same, and focuses on the lossy/lossless aspect. This means that lossy implies orderless, and lossless implies in-order throughout this document. Further, the property of streaming/datagram is of minor importance to the DOF implementation, and so it is not discussed in detail. The DOF Network Protocol (discussed later) is responsible for converting streaming transports back to datagram. Finally, with regards to state, there are three possibilities: none (no shared state, or state on only one node and not the others), session, and connection. DOF implementations rarely care about the difference between session/connection, and so the focus will be between none and session.
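To make the streaming-versus-datagram distinction concrete, the sketch below shows one generic way to recover datagram boundaries from a byte stream. It is not the DOF Network Protocol framing, which is defined elsewhere in the specification; the 16-bit big-endian length prefix and the names `frame` and `Deframer` are assumptions chosen only to illustrate the idea.

```python
import struct


def frame(datagram: bytes) -> bytes:
    """Prefix a datagram with a hypothetical 16-bit big-endian length."""
    if len(datagram) > 0xFFFF:
        raise ValueError("datagram too large for a 16-bit length prefix")
    return struct.pack(">H", len(datagram)) + datagram


class Deframer:
    """Rebuilds datagrams from arbitrary chunks of a byte stream."""

    def __init__(self):
        self._buffer = bytearray()

    def feed(self, chunk: bytes):
        """Add stream bytes and return the list of datagrams now complete."""
        self._buffer += chunk
        datagrams = []
        while len(self._buffer) >= 2:
            (length,) = struct.unpack_from(">H", self._buffer, 0)
            if len(self._buffer) < 2 + length:
                break  # wait for the rest of this datagram
            datagrams.append(bytes(self._buffer[2:2 + length]))
            del self._buffer[:2 + length]
        return datagrams
```

The stream may deliver bytes in chunks that do not align with the original datagrams; the deframer buffers partial data until a whole datagram is available.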

Excluding the addressing types, these variations combine to form four different relationships: none/lossy, none/lossless, session/lossy, session/lossless. Of these four, the combination of none/lossless is ignored (it is virtually impossible to guarantee no datagram loss without some shared state). This leaves three primary categories of node relationships that are of concern.
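The counting in the preceding paragraph can be checked mechanically. This short sketch enumerates the state and delivery combinations and removes the one that the text sets aside; the variable names are chosen for illustration only.

```python
from itertools import product

STATE = ("none", "session")
DELIVERY = ("lossy", "lossless")

# All four state/delivery combinations.
all_relationships = list(product(STATE, DELIVERY))

# none/lossless is ignored: lossless delivery needs some shared state.
relationships = [r for r in all_relationships if r != ("none", "lossless")]
```

The remaining entries are none/lossy, session/lossy, and session/lossless.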

Note that at the transport there may be many different combinations that can be considered equivalent to these three. This discussion relates to how DOF implementations view the transport, and what impact the different transport properties have on DOF protocol specifications.

A datagram is received from the transport in one of two ways: on a session (of any type, including through a server), or through a server with no session. In the first case the session alone may be enough to identify the transport addresses used by both the sender of the datagram and the receiver of the datagram (if the session is between two nodes, each with a transport address). Further, in this first case where the session is between 2 nodes only a reference to the session itself is required in order to send a datagram back to the original sender. In the case of no session or a multipoint session, the datagram itself is associated only with the server it was received on and the transport address of the sender. It is possible to send a datagram back to the sender, but doing so requires that the transport be given a server, any session, and the transport address of the destination.

In order to send a datagram on a DOF transport the transport must be given either a session, or a server along with a transport address (and possibly a session).
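The sending rule can be expressed as a small precondition check. This is a sketch under stated assumptions: `can_send` and its parameters are hypothetical names, and the arguments stand in for whatever session, server, and address objects a real transport API would provide.

```python
from typing import Optional


def can_send(session: Optional[object] = None,
             server: Optional[object] = None,
             address: Optional[object] = None) -> bool:
    """Check whether enough information is present to send a datagram.

    Sending needs either a session alone, or a server together with a
    transport address (a session may accompany them but is not required).
    """
    if server is not None and address is not None:
        return True
    return session is not None
```

A server without a destination address, or an address without a server, is not sufficient unless a session is also supplied.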

In a true distributed peer-to-peer system, almost every node in the system is both a server and a client. This means that most nodes have the ability to initiate or respond to a session. It is important to understand the typical implementation guidelines for these types of nodes, and to define the expected behavior of a server and client. This is one purpose of this section of this document.

DOF architecture imposes several requirements on clients and servers. These requirements are independent of transport, but do depend on the specifics of the session or connection provided. The following assumptions are made by the architecture. Failure to meet these assumptions will result in application failures, although depending on system design these may be expected and acceptable.

DOF implementations assume:

-

It is possible to send a response to the sender of a datagram (the client). Without this capability no responses could be received by the client. This is a very typical feature of all transports, and so is a safe assumption. DOF implementations further assume that for datagrams received by servers, a response may originate from a different server (transport address) than the server that received the datagram. This assumption is more complicated, and is discussed further later in this section.

-

If the server allows lossless sessions to be created, then that server can be identified by the transport address of a lossy response datagram. This assumption forms a link between the transport address of the lossless server and the transport address of the source of the lossy response datagram. This assumption only indicates that such a server may exist; it does not mandate that it must exist.

-

If the server allows lossless connections to be created, then that server can be identified by the transport address of a lossy command (non-response) datagram. This is the same assumption as above but in the opposite direction. This assumption also only makes sense in a peer-to-peer context, because it presumes a server on the (original) client.

If possible, these assumptions should be satisfied by all transport implementations. The following sections go into further detail on the ways in which DOF implementations use the transport. These assumptions form the basis for how DOF implementations remain transport independent at the application layer.

3.1. Typical Session and Server Types

The discussion above indicates several properties that are associated with transports, clients, and servers. Out of the many different combinations of properties, there are three types that are so common that they are assumed to exist. These three types were introduced above.

3.1.1. Session/Lossless

This typical combination assumes that a server was used to create the session, and that from that point the session exchanges datagrams that are lossless and in-order (assuming that the two are related). Note that it is common for this combination to also provide a streaming session, but that DOF protocols do not differentiate streaming/datagram for this combination: it may be either. It is typical that this type of session will include only 2 nodes, but it is possible to have n-node sessions that are lossless.

DOF protocols make the following assumptions about these types of sessions and the servers that establish them:

-

The server type is session, although it may be connection (connection is never required). The transport will indicate the creation of the session to the DOF implementation, and it will create associated state. The DOF implementation will manage this state until (in the case of a connection) the transport indicates that the connection is terminated or that fact is determined by higher protocol layers.

-

All communication through the server is associated with a session. No datagram will be received (by the DOF implementation) that is not associated with a previously created session.

-

The session and datagram together contain enough information for a response or new command to be sent to the sender of a datagram. In the typical case of a 2-node session, only the session is required.

-

Only the unicast address type is allowed for both nodes in a 2-node session. In n-node sessions the other address types (multicast/broadcast) are allowed, although broadcast is limited to the nodes in the session. The session or connection established uniquely identifies a single application on each node.

-

Since a session or connection must be created before a datagram is exchanged, the transport must provide a notification method for session creation. Further, the application must explicitly accept the new session in order to correctly track the state that it must maintain. Once created, the session can exchange a datagram. A received datagram is always provided in the context of a session. The session may be streaming, in which case the DOF Network Protocol will provide framing to preserve the datagram boundaries.

Lossless sessions are almost always associated with streaming, and so this category is often called 'streaming' servers. However, it is actually the session and lossless aspects of the sessions, along with the number of nodes in the session, that is the most critical for DOF operation.

3.1.2. None/Lossy

This typical combination assumes that no session is created. This means that one node maintains state about the other (the client), and that the other node utilizes a server that receives datagrams. The lack of shared state almost always indicates a lossy relationship.

Note that the lack of shared state (even though one node is likely maintaining state) means that no session exists. This lack of session means that the server treats each individual datagram as unrelated (although datagram relationships may be reintroduced due to different protocol layers).

DOF implementations make the following assumptions about these relationships:

-

The server does not create a session, it only accepts datagrams.

-

All three addressing types (unicast, multicast, and broadcast) are possible with these servers.

-

Since no session is created, the transport merely provides a notification method for the arrival of a datagram. Each datagram has identical framing to when it was sent.

-

Each datagram that arrives is tagged by the transport with enough information that a corresponding datagram can be sent back to the sender by using a server and transport address.

-

Each datagram that arrives is tagged by the transport with the sender’s address, allowing other clients using the same transport to send a datagram to the sender (this is the definition of 'discovering' a transport address).

Because of their association with a datagram, this category is often called 'datagram' servers. However, it is actually the lack of session and lossy nature of the server that is the most critical for DOF operation (along with the capability of multicast and broadcast).

3.1.3. Session/Lossy

This typical combination assumes that a session is created, but that datagrams may be lost. All nodes maintain state about the session. It is very typical for an implementation to create this combination from the none/lossy combination just described through the use of different protocol layer functions. Note that the client likely already maintains state, and so only the server needs to be told to keep track of the particular client. Note that some sort of session identification is likely required as well, provided by different protocol layers on top of the transport. This identification relates different datagrams to each other, as well as to the session information.

DOF implementations make the following assumptions about these relationships:

-

The server itself may not create a session, and may only accept datagrams. The DOF implementation creates the session if required and not provided.

-

Any session created by the server is automatically destroyed when no longer used. The DOF implementation does not manage the session in this case.

-

All three addressing types (unicast, multicast, and broadcast) are possible with these sessions. The session established uniquely identifies a single application on each node.

-

The transport provides a notification for the creation of a new session (if handled by the transport). Otherwise the session is managed by the DOF implementation.

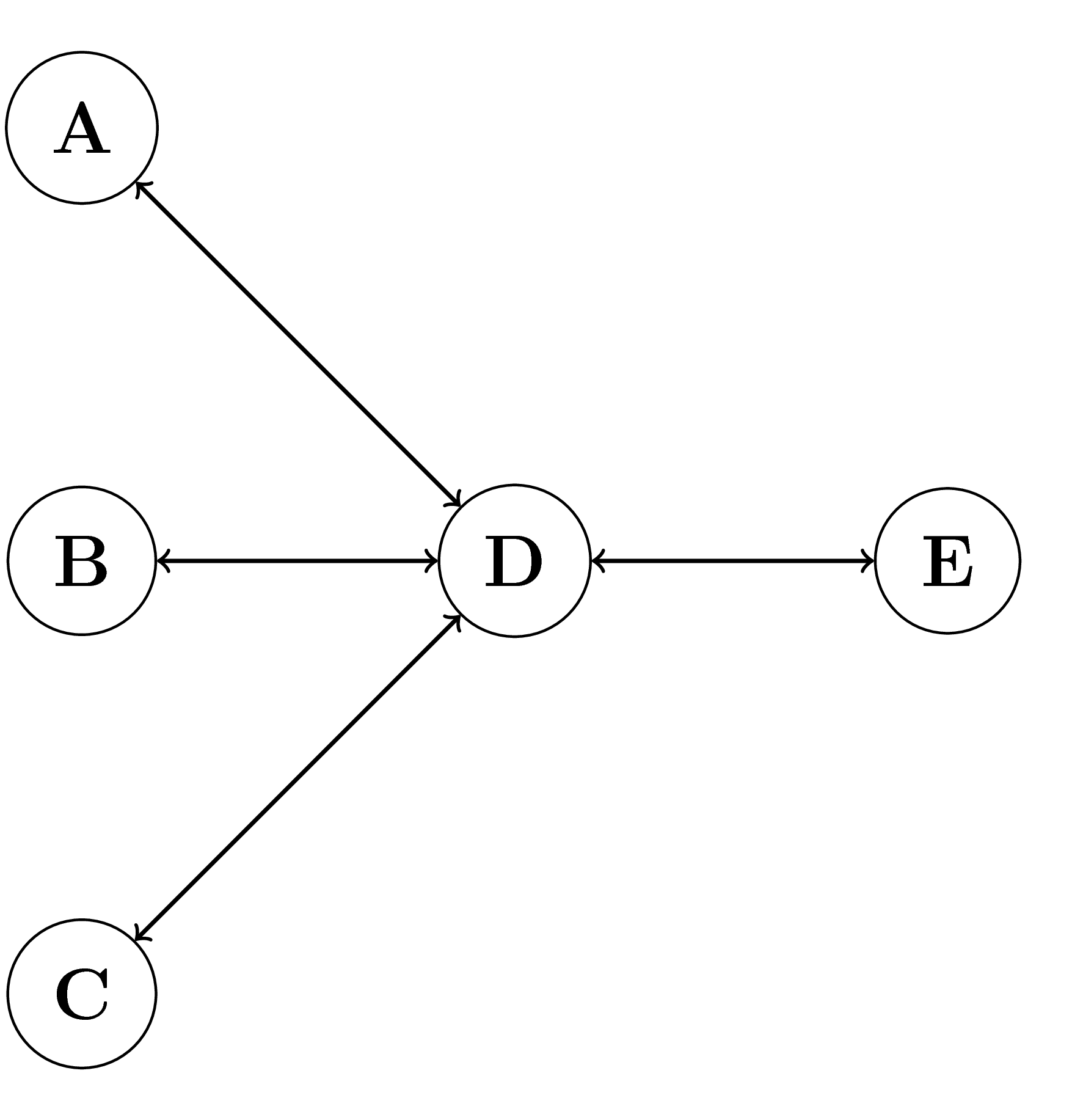

3.2. General Transport Properties

The general function of the transport is to provide a datagram to the DOF implementation and accept a datagram from the implementation that will be sent on the network. Each datagram is either in the context of a node (to be sent to a server), a session, or a server (to be sent to a node).

Servers and the sessions that they create are generally bundled into applications, with each application running on a node being associated with a unique set of servers (and associated transport addresses). The assumptions introduced earlier in this section are meant to allow discovery of the relationships between the servers associated with a single application.

Relating to this association, the overall assumption is that there is a relationship between the type of server and the associated transport addresses. From the perspective of a node, knowing something about the datagram properties discussed above allows for mapping from one server type to another. As DOF implementations deal fundamentally with two types of servers (lossy, and those that create lossless sessions), this means that there are generally two servers that must be related.

Each transport identifies the format and definition of its transport addresses. This information is opaque to the DOF implementation. Further, each transport has an associated Maximum Transmission Unit, or MTU. This represents the maximum datagram size that can be transmitted using the transport. In general there is a relationship between datagram size and network efficiency. This relationship is not exposed to the DOF implementation, other than a recognition that efficiency likely drops as datagram size approaches the MTU.

DOF implementations require that the following properties are associated with each datagram, and they must be provided by the transport implementation:

-

The source transport address. For 2-node sessions, this is associated with the session itself. For servers and n-node sessions it is likely associated directly with the datagram.

-

The datagram data and size.

-

The source transport address' type. This will always correspond to a unicast address.

-

The target transport address' type. This is related to the server in most cases, otherwise with the session itself.

-

The properties of the server/session that received the datagram:

-

Lossy/Lossless (corresponding to Orderless/In-order).

-

Streaming/Datagram.

-

None/2-node Session/n-node Session.
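As an illustration, the properties listed above could be carried in a single record that the transport hands to the DOF implementation with each datagram. The type and field names below are assumptions made for this sketch; the specification only lists the properties, not a data structure.

```python
from dataclasses import dataclass
from enum import Enum


class AddressType(Enum):
    UNICAST = "unicast"
    MULTICAST = "multicast"
    BROADCAST = "broadcast"


class SessionKind(Enum):
    NONE = "none"
    TWO_NODE = "2-node"
    N_NODE = "n-node"


@dataclass(frozen=True)
class DatagramProperties:
    """Per-datagram properties supplied by the transport (hypothetical type)."""
    source_address: bytes              # opaque to the DOF implementation
    data: bytes                        # the datagram payload
    source_address_type: AddressType   # always unicast
    target_address_type: AddressType
    lossless: bool                     # True implies in-order delivery
    streaming: bool                    # False means datagram framing
    session_kind: SessionKind

    def __post_init__(self) -> None:
        # The source address type always corresponds to a unicast address.
        if self.source_address_type is not AddressType.UNICAST:
            raise ValueError("source transport address type must be unicast")

    @property
    def size(self) -> int:
        """Size of the datagram data in bytes."""
        return len(self.data)
```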

3.2.1. Impact of Security on Datagram Properties

As mentioned earlier, it is often difficult for an application or transport implementation to accurately determine the target transport addressing that was used on any particular datagram. Even in the case of sessions that should be point to point, the possibility of packet injection means that the 'real' source of a datagram is suspect. In the case of lossy servers it may not be possible to determine if the client really used transport unicast, multicast, or broadcast.

This can cause problems for DOF implementations, since application behavior relies on the specific property values associated with the datagram.

In general, this means that in the absence of security the client and server must do their best to determine the actual datagram properties, but they must realize that the information cannot be fully trusted.

However, once a security layer has been added then the properties must be trusted. This means that the source and destination application addressing (not transport addressing) is verified, that the datagram data and size is verified, and that the transport address type (unicast, multicast, or broadcast) is verified. When the security protocol cannot validate any of these properties then it must modify the datagram properties to represent what is possible, rather than trust the transport-determined property value.

For example, if a session (including the DOF protocol layers) can guarantee that a particular datagram arrived from a particular sender and can only be received by a single node, then it can modify the property to indicate unicast addressing. If, however, the security protocol cannot guarantee a single receiver, then it must modify the property to indicate multicast or broadcast as appropriate. Applications that base behavior on these datagram properties for a secure datagram must use the property values as modified by the security protocol.
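The addressing example above can be sketched as follows. The function name, its parameters, and the choice of multicast as the default fallback are assumptions for illustration; the specification says only that multicast or broadcast is chosen "as appropriate".

```python
from enum import Enum


class AddressType(Enum):
    UNICAST = "unicast"
    MULTICAST = "multicast"
    BROADCAST = "broadcast"


def secured_target_type(
    transport_reported: AddressType,
    single_receiver_guaranteed: bool,
    fallback: AddressType = AddressType.MULTICAST,
) -> AddressType:
    """Return the target address type to expose for a secure datagram."""
    if single_receiver_guaranteed:
        # Security guarantees exactly one possible receiver.
        return AddressType.UNICAST
    if transport_reported is AddressType.UNICAST:
        # The transport claims unicast, but security cannot confirm it,
        # so the property is changed to the (assumed) fallback.
        return fallback
    # Multicast or broadcast is already the weaker claim; keep it.
    return transport_reported
```

Applications would then base their behavior on the returned value rather than on the value the transport originally reported.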

|

Secure datagram transport properties must represent only secure information.

dof-2008-1-spec-1: This allows applications to determine the level of trust for all information that they receive, even from the transport layer. Other information that is untrusted may be presented, but should not indicate that it is secure (unless the transport knows it is secure). For example, transport addressing is typically not known to be secure, and so should not be trusted unless the transport indicates that it is secure. |

3.3. Lossless Requirements

As discussed above, lossless servers are always associated with a session or connection. The server must indicate to the implementation that a new session has been created, and the implementation must explicitly accept the new session.

The following requirements apply to each lossless session accepted by the implementation.

|

Sessions must be monitored and must guard against improper clients.

dof-2008-1-spec-2: A session with a non-communicative or ill-defined client must not be allowed to exist for too long. There are no explicit timeouts defined in this case, and so the implementation is free to impose whatever requirements it will. The implementation can use the features of the DOF Presentation Protocol to ensure that a session remains valid. |

Sessions are always initiated by clients. The client must determine the appropriate target transport address for the server. For lossless sessions, the client must maintain the session, and is primarily responsible for ending the session. If the client determines that the session is no longer required then it should use the transport to close the session in a way that the server session is also closed. This should involve correctly closing the session at each layer of the protocol stack, beginning with the application layer and ending with the transport layer.

However, it is possible for the server to terminate a session as well. In this case the client must remove the state associated with the session at each layer of the stack.

3.3.1. Traffic Symmetry

The DPS frequently runs over lossless transports like TCP/IP. These transports are typically optimized for lossless bi-directional data, and can have performance issues when used to transmit uni-directional, bursty datagrams. Unfortunately, many small-footprint stacks exacerbate this performance problem by limiting their implementations. To minimize problems it is best that each datagram be acknowledged before the next can be sent, or at least that the datagrams flowing in each direction are roughly equal.

There are two common optimizations specific to TCP/IP that can cause performance problems in these situations. The first is the Nagle Algorithm, which can slow the sending of data assuming that it is desirable to combine many small datagrams into fewer larger datagrams. The second is the introduction of a delay on the ACK assuming that it is better to piggyback the ACK on a data datagram than to send it with no data. The combination of these two optimizations can lead to poor performance of the DPS on TCP/IP.

There are typically options to disable the Nagle Algorithm (TCP_NODELAY). Because this affects outgoing traffic there is little more that can be done to optimize performance other than disabling it.
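As a minimal sketch, on platforms that expose the BSD socket API the Nagle Algorithm can be disabled with the `TCP_NODELAY` socket option. The helper name `disable_nagle` is an assumption for this example; the socket calls are from Python's standard `socket` module.

```python
import socket


def disable_nagle(sock: socket.socket) -> None:
    """Disable the Nagle Algorithm on a TCP socket."""
    sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)


# Create an unconnected TCP socket, disable Nagle, and read the option back.
sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
try:
    disable_nagle(sock)
    nodelay_enabled = sock.getsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY) != 0
finally:
    sock.close()
```

The option is set per socket, so a server would typically apply it to each accepted connection.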

The second optimization, that of delaying an ACK until data exists to "piggyback" it on, is typically not configurable. The answer to this performance issue is to ensure that the protocol in question is symmetric - meaning that each datagram sent in one direction elicits a response datagram going the other direction.

Unfortunately, a typical use case is to establish a session, establish state, and then wait for responses. In this case, little or no traffic moves in one direction and many periodic datagrams move in the other direction. This is the worst situation for both the Nagle Algorithm and the ACK-delay problem. The Nagle Algorithm delays the commands, combining them into larger datagrams. The ACK messages delay because no datagrams are going in the other direction. This is a standard problem with asymmetric traffic in TCP/IP.

The DPS provides commands (the Ping and Heartbeat commands) that allow for symmetry in the application protocols. If an application protocol knows that it is in a non-symmetric situation and wants to even out the datagrams, it can use these DPP common commands. This behavior should depend on the transport, as it knows whether symmetry is beneficial.
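One way an implementation might decide when to send a balancing datagram is to count inbound datagrams since the last outbound one. This is a sketch only: the `SymmetryMonitor` class and its threshold are assumptions, and the actual Ping or Heartbeat PDU that would be sent is not shown here.

```python
class SymmetryMonitor:
    """Tracks one-directional traffic on a lossless session (hypothetical)."""

    def __init__(self, max_unanswered: int = 4) -> None:
        # Number of inbound datagrams tolerated without any outbound one.
        # The default of 4 is arbitrary and not taken from the specification.
        self.max_unanswered = max_unanswered
        self.received_since_send = 0

    def on_receive(self) -> bool:
        """Record an inbound datagram.

        Returns True when the caller should send a balancing datagram
        (for example, a DPP Ping or Heartbeat).
        """
        self.received_since_send += 1
        return self.received_since_send >= self.max_unanswered

    def on_send(self) -> None:
        """Record any outbound datagram, which restores symmetry."""
        self.received_since_send = 0
```

A transport that does not benefit from symmetry would simply not use such a monitor.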

3.4. Lossy Requirements

As discussed above, lossy can refer to both sessions (between nodes) and servers (between a node and a server). In the case of a server there is no indication of communication except for the arrival of a datagram. Any session creation is the responsibility of the DOF implementation. As discussed earlier, the client transport implementation should be able to map between these inbound datagram responses and an associated lossless server transport address.

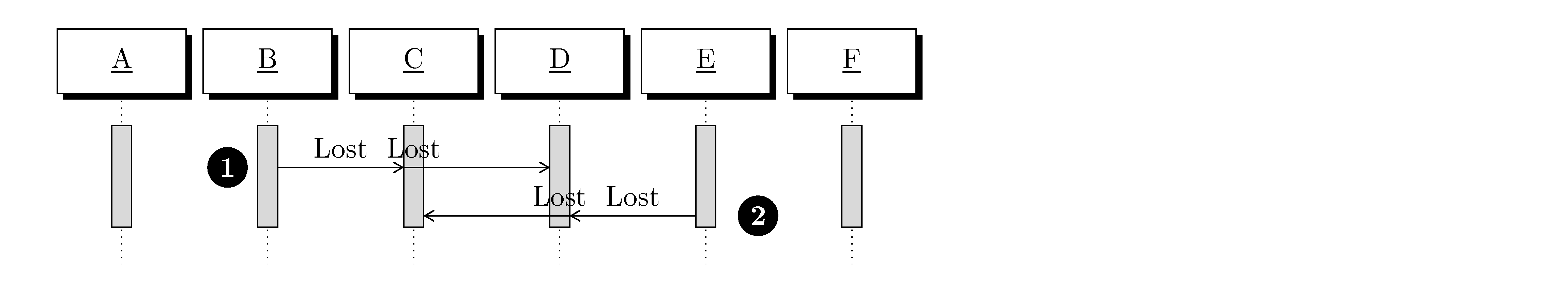

|

Datagram responses should be mappable to an associated lossless server transport address.

dof-2008-1-spec-3: A general architecture principle discussed earlier is that a datagram response can be used to determine a corresponding lossless server transport address (if one exists). This means that the transport information from a datagram response should contain enough information to identify the transport address associated with a lossless server (on the responding server). |

|

Lossy commands should be mappable to an associated lossless server transport address.

dof-2008-1-spec-4: An outbound lossy command should allow any corresponding lossless server transport address to be determined by the recipient. This allows any command to be used to identify the transport address of the client’s lossless server. |
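The mapping described by these two requirements belongs to the transport, because transport addresses are opaque to the DOF implementation. The sketch below assumes a hypothetical IP-like transport in which the lossless server shares the host and port of the lossy source; this convention is an assumption for illustration, and each transport specification defines its own mapping.

```python
from typing import NamedTuple, Optional


class TransportAddress(NamedTuple):
    """A transport address for a hypothetical IP-like transport."""
    kind: str   # "lossy" or "lossless"
    host: str
    port: int


def lossless_server_for(source: TransportAddress) -> Optional[TransportAddress]:
    """Map the source of a lossy datagram to a candidate lossless server.

    The result only indicates where such a server may exist; it does not
    guarantee that one is listening there.
    """
    if source.kind != "lossy":
        return None
    # Assumed convention: same host and port, different server type.
    return TransportAddress("lossless", source.host, source.port)
```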

3.5. Multicast Requirements

Servers may have a configured transport address for transport multicast communications. In most cases, the transport address used by the DOF implementation should be standardized for the transport. This allows greater compatibility between systems. If a set of applications (a system) wants to operate in isolation then it may use different multicast addressing, although this additional addressing should be registered with IANA (for IP) or another applicable standards body.

Even in the case of systems using different multicast addressing, the servers should still use the standardized transport addressing in addition to their system addressing.

|

Multicast servers should use registered, consistent transport addresses.

dof-2008-1-spec-5: This requirement helps with interoperability of devices. |

3.6. Address Discovery

In order for a client to communicate with a server it must know the appropriate transport address. This information is typically obtained in one of three ways:

-

Static configuration. The client can be told explicitly where to connect. This method is always possible, but is usually inflexible and generally not recommended. If static configuration must be used (for example, in the case of a WAN system where discovery is not possible), then naming services (like DNS) should be used. The use of a fixed transport address is strongly discouraged.

-

Discovery. Addresses can be discovered based on multicast discovery. This is more flexible than static configuration, but requires multicast to be enabled on the network and can only discover local servers. Discovery does offer the potential for minimal configuration.

-

Dynamic configuration. Many transports support dynamic configuration. For example, DHCP can be used on IP-based networks to configure DOF properties. Like discovery, dynamic configuration offers the potential for minimal configuration. The use of standard mechanisms to configure DOF properties (such as additional fields in a DHCP request) should be registered with the applicable standards body.

3.7. Transport Specifications

Each transport that can be used with DOF systems must have a specification document. The specification must outline how the transport requirements detailed here are satisfied. It must also define any standardized addressing and other properties.

The transport specification must indicate the following:

-

The format and meaning of its transport addressing.

-

The types of transport addressing that are supported (unicast, multicast, broadcast).

-

The types of servers and sessions that are possible (lossless, lossy, 2-node, n-node), with any associated MTU information.

-

The mapping that is possible between different server types based on transport addresses.

-

The different types of configuration that are possible, including the provisions for any dynamic configuration and how DOF properties can leverage dynamic configuration.

This information is required for determining the transport context information.

Up to this point the discussion of different transport address types has not been combined with the different types of transport configurations. Keep in mind the three types of transport combinations discussed: none/lossy, session/lossy, session/lossless. These can be combined with three different address types: unicast, multicast, and broadcast. However, only the 'none' type can utilize the different address types, with sessions always being associated with unicast addressing.

Each datagram used by DOF implementations can therefore be categorized by its requirements in three areas:

-

Whether a session is required, and the number of nodes that can be in the session.

-

Which addressing is allowable (unicast, multicast, or broadcast).

-

Whether the session must be lossless (implying in-order), or if lossy is allowed (implying orderless).

This can be summarized as the 'Session' requirements and 'Addressing' requirements. Session is one of: None, Lossy (2-node, n-node), or Lossless (2-node, n-node). Addressing is one of: Unicast, Multicast, or Broadcast.

Each DOF PDU will indicate the session and addressing requirements that it has. In the case of a session the source of the session will be identified.
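The 'Session' and 'Addressing' requirements could be represented as a pair of sets attached to each PDU definition. The names below, and the example requirements, are assumptions made for this sketch and do not describe any actual DOF PDU.

```python
from dataclasses import dataclass
from enum import Enum
from typing import FrozenSet


class Session(Enum):
    NONE = "none"
    LOSSY_2_NODE = "lossy/2-node"
    LOSSY_N_NODE = "lossy/n-node"
    LOSSLESS_2_NODE = "lossless/2-node"
    LOSSLESS_N_NODE = "lossless/n-node"


class Addressing(Enum):
    UNICAST = "unicast"
    MULTICAST = "multicast"
    BROADCAST = "broadcast"


@dataclass(frozen=True)
class PduRequirements:
    """The session and addressing combinations a PDU allows (hypothetical)."""
    sessions: FrozenSet[Session]
    addressing: FrozenSet[Addressing]

    def permits(self, session: Session, addressing: Addressing) -> bool:
        """Return True if the PDU may be used in this context."""
        return session in self.sessions and addressing in self.addressing


# Requirements for an imaginary PDU that needs a 2-node lossless session.
example = PduRequirements(
    sessions=frozenset({Session.LOSSLESS_2_NODE}),
    addressing=frozenset({Addressing.UNICAST}),
)
```

An implementation could check `permits` against the properties of the server or session that received a datagram before processing the PDU.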

4. DOF Protocol Stack (DPS)

The DOF Protocol Stack (DPS) is the foundation for all DOF protocols. These protocols operate on a variety of platforms, including small devices with limited resources. Even though the small devices require optimization, the protocols follow the OSI model of layer separation as much as possible.

The DOF Protocol Stack also operates on a number of different transports. To the extent possible, the DPS and other DOF Protocols do not require specific information about the transport being used. For example, the protocols do not expose details of transport-layer logical addressing at higher levels. In the same way, implementations of the protocols maintain this same transport-agnostic view. Later sections of this document note specific transport requirements.

The lowest layer of the DPS is the DOF Network Protocol (DNP). This layer provides the minimum requirements in order for the DPS to be identified (including versioning), and provides the datagram length information on streaming transports. The DNP also implements some transport layer functionality, including a sense of addressing that can augment what the transport provides.

The session and presentation layers are combined in the DOF Protocol Stack as the DOF Presentation Protocol (DPP). DPP handles encryption and replay-attack prevention (functions of the presentation layer) through encryption modes of operation. It does not handle retries or network-layer acknowledgements. DPP does provide the foundation for commands and responses, including operation identification and loop detection.

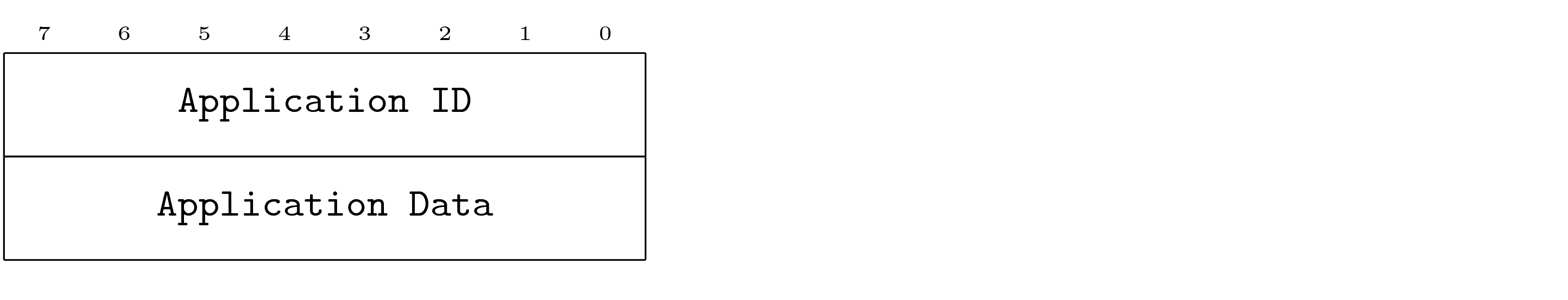

Finally, there is the DOF Application Layer. Other references discuss specific DOF application protocols, although some discussion common to all application protocols appears here.

4.1. General Principles

The following sections discuss general principles that govern the DPS and its associated application protocols. These topics relate generally to the DNP, DPP, and application layers, although the specifics may apply more to one than to the others.

These principles are also not dependent on the version or layer used. This means that they apply to the stack as a whole, and that any application protocol can depend on the behavior.

4.1.1. Reserved Bits

Throughout the DPS and DOF application protocols, there are references to 'Reserved' bits. The following discussion applies to all of these fields.

This protocol specification defines the meaning of every bit that passes over the wire. In many cases, it does so by defining a field and then defining the range of values that can appear in that field. In other cases, it defines a 'bit field,' and in doing so leaves some bits as available. These bits may require a constant value, or the specification may indicate that they are Reserved.

In the absence of other requirements, the handling of these remaining bits would be ambiguous, which is not a good idea in protocol design. However, the possible future use of these bits is restricted because existing implementations will not understand any newly defined behavior.

Any future use must then:

-

Not change the 'format' of the PDU, as existing implementations would not understand how to read the PDU (or would read it incorrectly).

-

Not affect the meaning of the command or response in a way that the receiver must understand.

-

Only introduce optional behavior that does not change the format of the command or response.

Note that any changes that do not meet these requirements necessitate changing the protocol revision.

Handling these bits requires correct management. The following rule defines correct behavior.

|

Senders must set all RESERVED bits to zero.

dof-2008-1-spec-6: The zero value ensures a known state (which will be the default) for any new behavior. |

|

Receivers must ignore all RESERVED bits.

dof-2008-1-spec-7: This ensures that old implementations will ignore behavior defined in the future, although they will accept the PDU independent of the value. |
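The two rules can be illustrated with simple bit masking. The mask below describes an imaginary one-byte bit field and is an assumption for this sketch; it is not the layout of any actual DOF field.

```python
# Hypothetical one-byte bit field: bits 7, 6, 1, and 0 are defined,
# and bits 5 through 2 are Reserved.
DEFINED_MASK = 0b1100_0011
RESERVED_MASK = ~DEFINED_MASK & 0xFF


def encode_flags(defined_bits: int) -> int:
    """Sender side: produce the octet with all Reserved bits set to zero."""
    if defined_bits & RESERVED_MASK:
        raise ValueError("caller attempted to set Reserved bits")
    return defined_bits & DEFINED_MASK


def decode_flags(octet: int) -> int:
    """Receiver side: ignore Reserved bits, whatever their value."""
    return octet & DEFINED_MASK
```

Note that the receiver does not reject an octet with Reserved bits set; it accepts the PDU and masks the bits away.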

4.1.2. Timeouts

A general requirement of the DPS is that non-communicating or ill-behaved sessions should be detected and terminated/closed as quickly as possible. Ill-behaved sessions are particularly dangerous (from a security perspective) during stack establishment. A goal is to force negotiation as quickly as possible, without being so restrictive that slow network links cause problems.

|

In order to force negotiation quickly each layer in the protocol stack may define timeout behaviors. These timeouts are serial, not parallel. This means that a single timer for each session can enforce all timeouts. The behavior of the stack when a timeout occurs is uniform: the implementation terminates the session. Reaching each milestone in stack negotiation resets the timeout based on the requirements of the new stack layer or layer set. |

This general rule applies throughout negotiation and through the authentication phase. The single timer use does not continue into the application protocols, but may still be used (based on the transport requirements) to determine communication failures. These types of timeouts are dependent on the implementation.
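Because the timeouts are serial, one deadline per session is enough. The following sketch shows a single timer that is reset as each negotiation milestone is reached; the class name, method names, and timeout values are assumptions, since each layer defines its own timeout behavior.

```python
import time
from typing import Callable, Optional


class NegotiationTimer:
    """A single per-session timer that enforces serial timeouts (hypothetical)."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._deadline: Optional[float] = None

    def reach_milestone(self, timeout_seconds: float) -> None:
        """Reset the timer using the timeout of the next layer or layer set."""
        self._deadline = self._clock() + timeout_seconds

    def stop(self) -> None:
        """Stop the timer, for example once negotiation has completed."""
        self._deadline = None

    def expired(self) -> bool:
        """Return True if the session should be terminated."""
        return self._deadline is not None and self._clock() >= self._deadline
```

When `expired` returns True the implementation terminates the session, regardless of which layer's timeout was in effect.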

4.1.3. Protocol Discovery

Different nodes in the same network may support different versions of DPS layers and different application protocol versions. A primary goal of DOF protocols is interoperability, and so it is important to be able to discover these different nodes and communicate with them if possible.

At the lowest layer (transport), there should be the ability to communicate with all nodes. This usually requires either multicast or broadcast. Specific system implementations may utilize non-standard addressing in order to localize communication. Independent of specific system requirements, DOF nodes should still listen on the standard addresses and ports defined for the transport in order to facilitate discovery by general nodes.

The ability to speak multiple versions of a protocol is generally associated with a gateway. However, it is possible that some nodes (clients or servers) may not function as a gateway, but may speak different protocol versions.

If these nodes simultaneously attempted to use all of the versions that they can, worst-case traffic would result. This is because they may be sending protocol versions that no other node understands, and so the traffic is wasted.

The same is true of a gateway. If gateway nodes simultaneously used all of their versions then the same worst-case traffic would result. It only makes sense for a gateway (or a multi-protocol server or client) to speak versions that others on the network are able to understand.

DOF protocols provide a method for discovering protocol versions in an optimized way. As a requirement, the nodes must be capable of using a multicast or broadcast transport. In general, the difference between 'node discovery' and protocol discovery is that in protocol discovery the goal is not to identify each node, but rather each protocol and protocol version. Whether there are 10 nodes speaking a version or 1,000 nodes is not important (that number can be determined later by using node discovery). This allows for some specific optimizations for protocol discovery.

As an example of the problem of multiple versions, consider the following. A company sells a DOF-based sensor product. The product implements specific versions of the DNP, DPP, and applications. The sensor only responds when queried. Years pass, and the customer has removed the original product that used the sensor, but has not removed the sensor. The customer installs a new product, which speaks different DNP and DPP versions.

How can the new product speak to the old sensor? Without aid (some sort of gateway) it cannot, unless the new product also speaks the older protocol versions. Assuming that it does not speak the older protocol version, then a gateway (a special node that speaks multiple protocol versions) is required. How can such a gateway discover which DNP and DPP versions are available on a network?

One solution, discussed above, would be to cycle through all possible versions, discovering nodes that use each combination. This is expensive in terms of network traffic. Another solution would be to have each node advertise itself on the network periodically, but this is also expensive in terms of network traffic that would be mostly redundant.

In order to solve this problem, DOF specifications define version zero of each protocol (and protocol layer) as a query protocol. Each node is required to support version zero, although it does not encapsulate normal PDUs. The version zero protocol for each layer follows a pattern that allows version discovery.

This means that all nodes will support at least two versions of each stack layer: version zero (for version discovery) and some other version (for PDU encapsulation). Implementations drop unrecognized versions.

|

Implementations must drop PDUs that use unrecognized versions on lossy transports.

dof-2008-1-spec-8: Unrecognized PDUs may arrive on lossy transports. This means that dropping PDUs using unrecognized versions on a lossy transport is safe. There is no indication on the receiving or sending node when this happens. |

Note that by supporting the query version (version 0) a node is only required to respond to queries. If a given node has no reason to discover other version information then it never needs to initiate a query.
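The version handling described in this section, for a PDU arriving on a lossy transport, can be sketched as a three-way decision. The function name and the string results are assumptions for illustration, and the format of the version zero query itself is not shown.

```python
from typing import AbstractSet

QUERY_VERSION = 0  # version zero is the query protocol for each layer


def classify_version(version: int, supported: AbstractSet[int]) -> str:
    """Decide how to handle a PDU received on a lossy transport.

    Returns "query" for the version discovery protocol, "process" for a
    version this node speaks, and "drop" for anything unrecognized.
    """
    if version == QUERY_VERSION:
        # Every node must be able to respond to version zero queries.
        return "query"
    if version in supported:
        return "process"
    # Unrecognized versions are silently dropped; neither the sender
    # nor the receiver is given any indication.
    return "drop"
```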

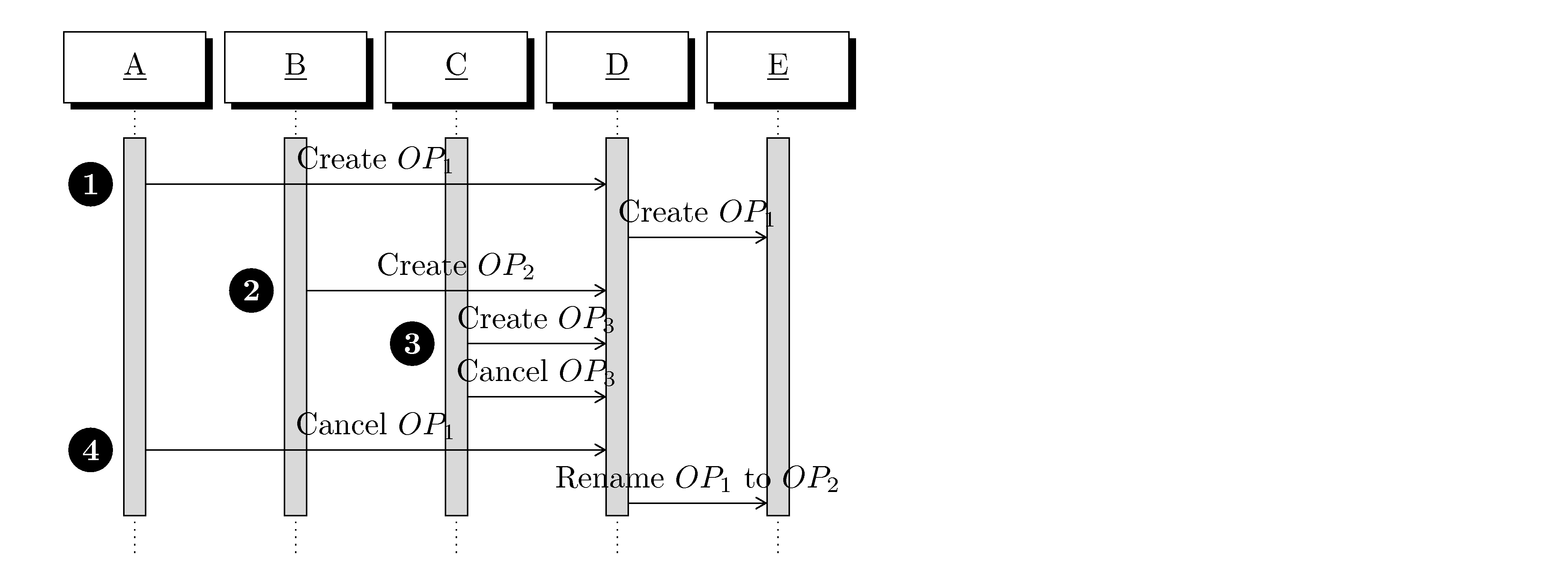

4.1.4. Protocol Negotiation

Different nodes in the same network may simultaneously be using different versions of DOF protocols, including the DNP, DPP and applications. This means that when two nodes communicate, they need to determine which versions they will use.

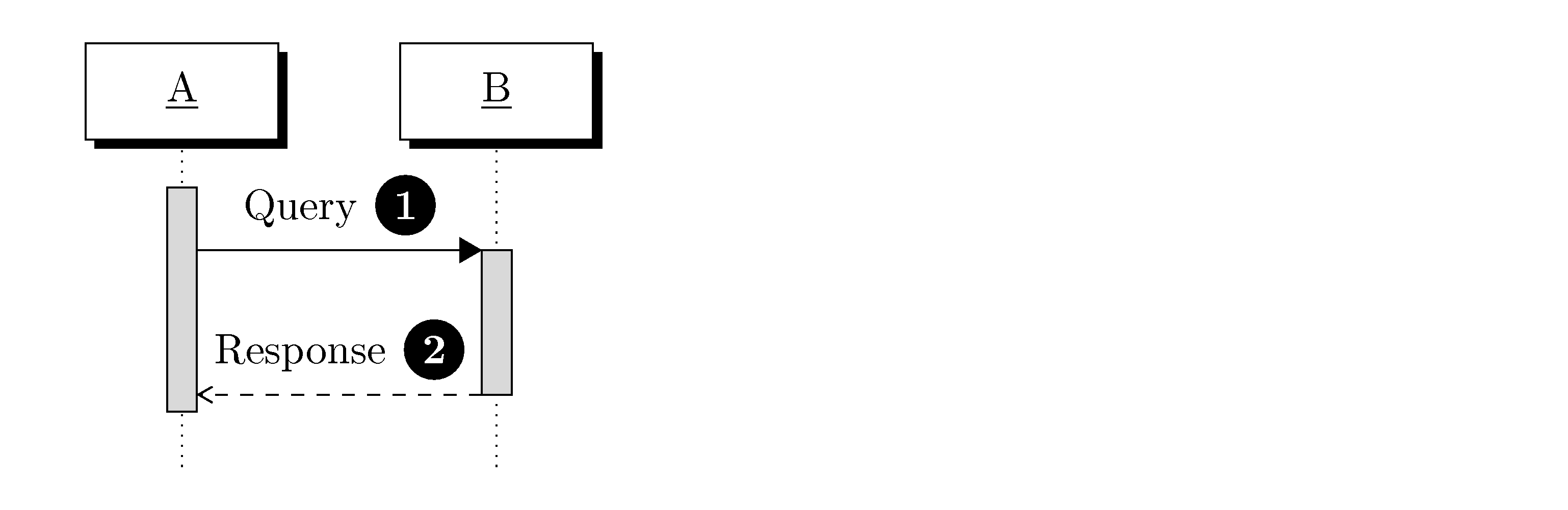

If the nodes are using a lossy session or server then the sender can either assert a version or use version discovery (unicast) to determine the versions spoken by the target. If the sender asserts a version that the receiver does not understand then the receiver silently drops the datagram.

When two nodes establish a lossless session, they have the benefit of both bi-directional non-broadcast communication and shared state. This makes the negotiation of protocol versions possible. It is also possible that a lossless session spans local networks where protocol discovery (which uses a multicast or unicast datagram) is not possible. This means that protocol negotiation is required for the two nodes to communicate.

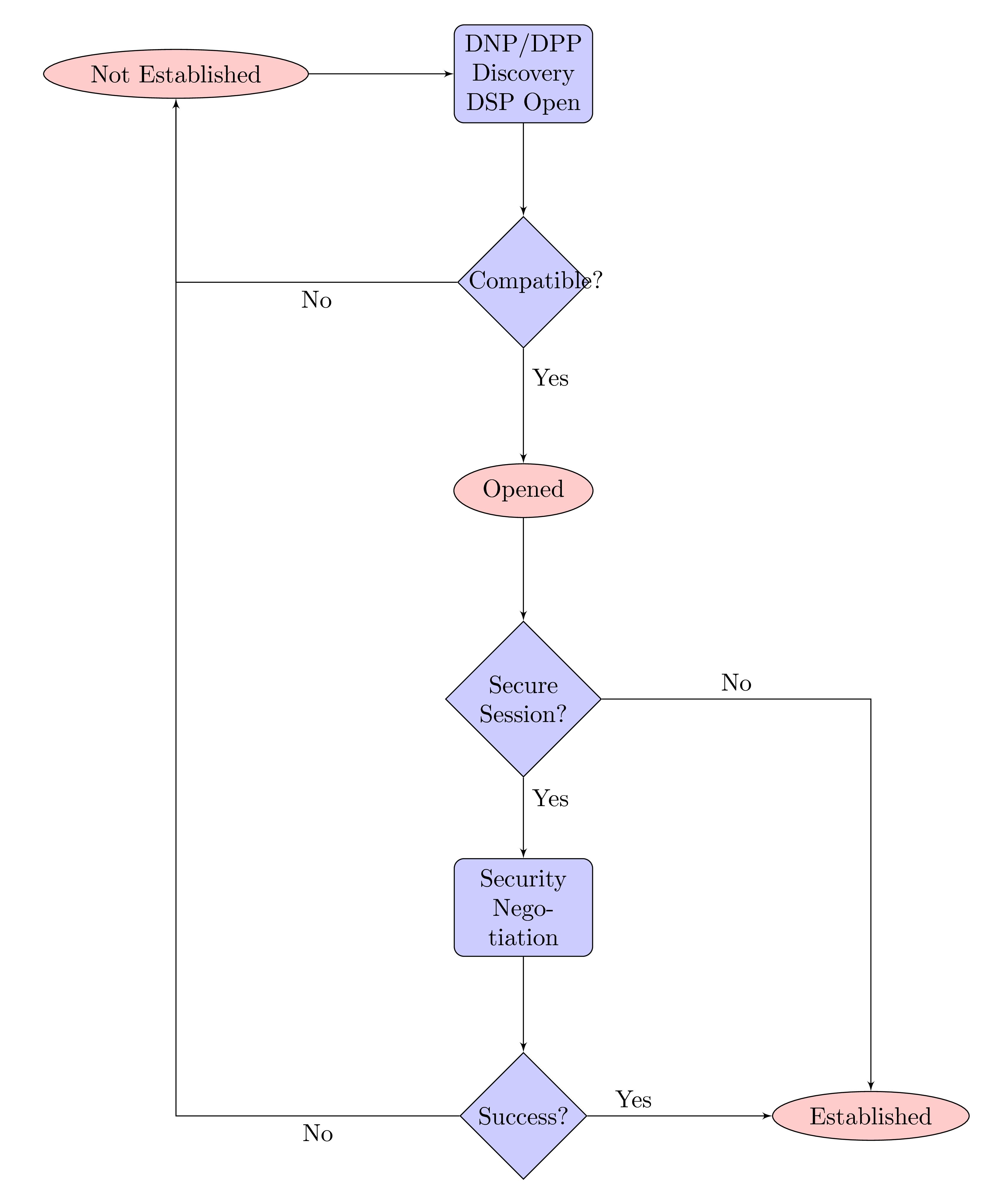

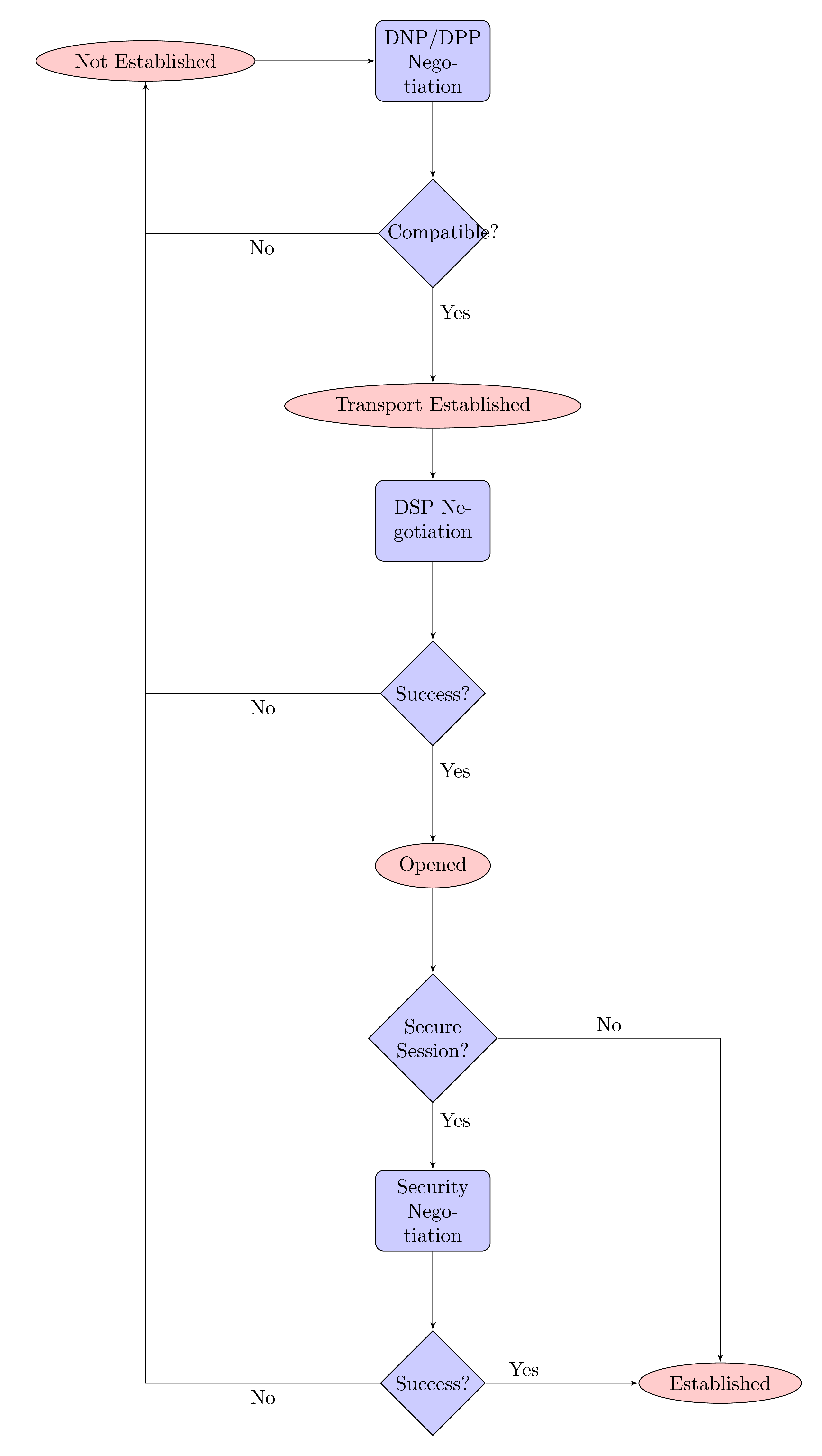

There are two phases to protocol negotiation of the DPS on a lossless session. First, the nodes negotiate DNP and DPP (simultaneously). Following that negotiation (and assuming successful negotiation) a specific application protocol, the DOF Session Protocol (DSP), is used to negotiate the application layer protocols and their options that will be used on the session. This negotiation includes the specific authentication and key distribution protocols that are required.

|

Two-node lossless transport sessions immediately negotiate protocols.

dof-2008-1-spec-9 Protocol version negotiation takes place immediately after the transport-level lossless session is established and before any application PDUs are exchanged. It begins with the client sending a datagram. |

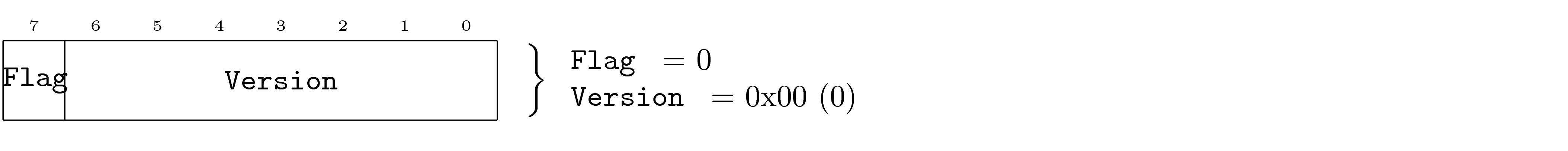

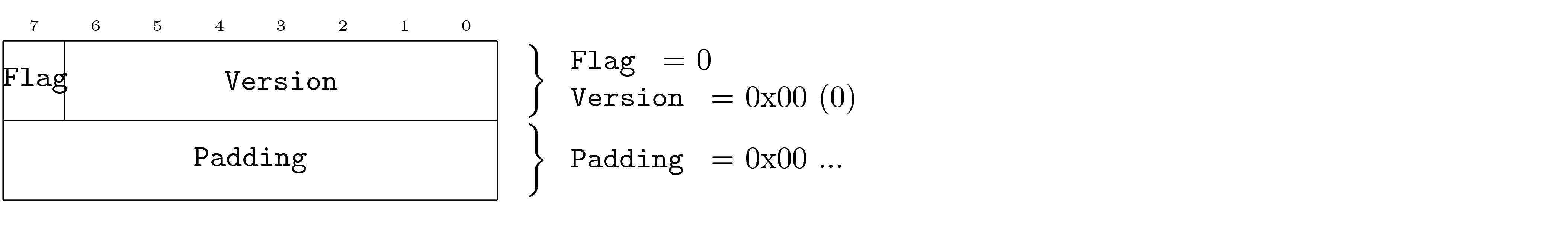

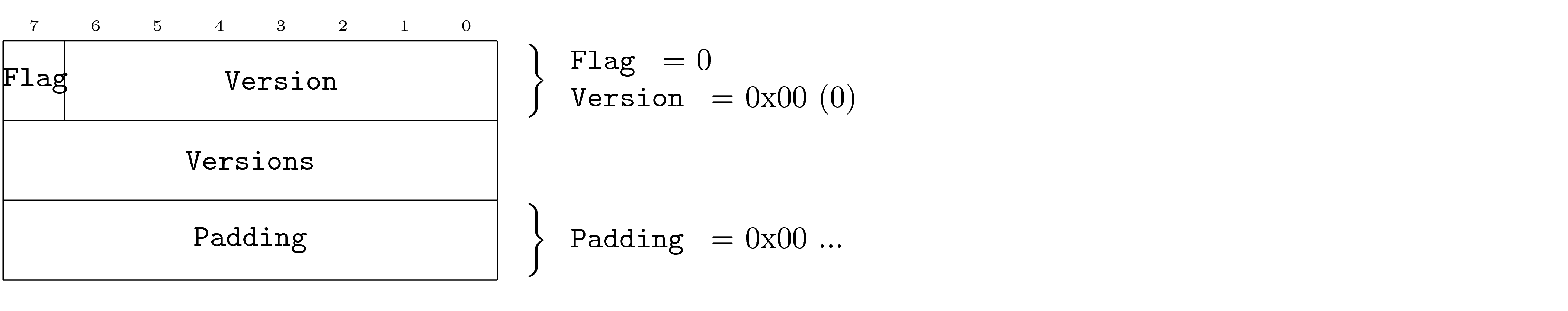

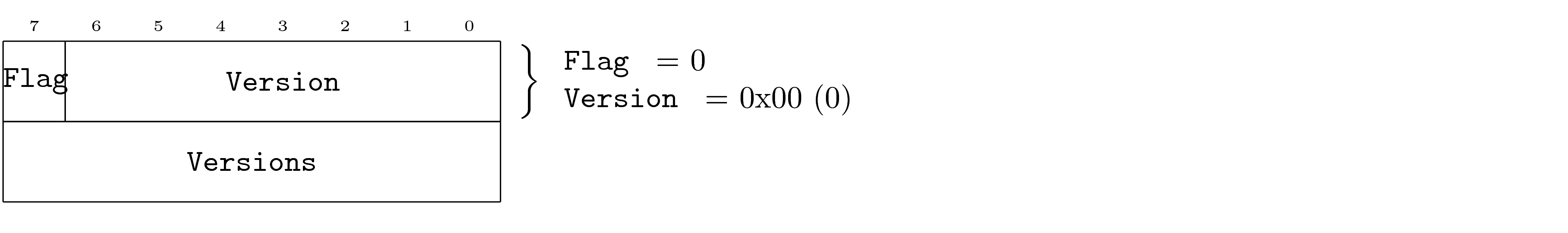

Negotiation of the protocol version begins with the client. The client identifies the DNP and DPP versions that it prefers (possibly the only ones it understands) by sending a two-byte datagram (one byte DNP, one byte DPP). The datagram uses only the protocol version bytes and omits the flag bytes, meaning that the flag bits must be clear.
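A minimal sketch of building and recognizing the two-byte negotiation datagram is shown below. It assumes, and this is an assumption rather than something stated in this section, that the flag bit is the high bit (0x80) of each version byte, so that version numbers occupy the range 0 to 127. The function names are hypothetical.

```python
FLAG_BIT = 0x80  # ASSUMPTION: high bit of a version byte marks a following flag byte


def encode_negotiation(dnp_version: int, dpp_version: int) -> bytes:
    """Build the two-byte negotiation datagram: one DNP byte, one DPP byte."""
    for version in (dnp_version, dpp_version):
        if not 0 <= version < FLAG_BIT:
            raise ValueError("version must fit in 7 bits so the flag bit stays clear")
    return bytes([dnp_version, dpp_version])


def decode_negotiation(datagram: bytes):
    """Return (dnp, dpp) for a negotiation datagram, or None otherwise."""
    if len(datagram) != 2:
        return None
    dnp, dpp = datagram
    if (dnp | dpp) & FLAG_BIT:
        # A set flag bit means flag bytes follow, so this is not negotiation.
        return None
    return dnp, dpp
```

On a streaming transport there are no datagram boundaries, so a real implementation would apply the same check to the first two bytes read rather than to a whole buffer.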

|

This definition of negotiation assumes that lossless 2-node sessions use a mechanism that provides in-order guaranteed delivery. It also assumes that nodes may send any number of bytes that the other side of the session receives in a timely fashion. |

If the server can accept those protocol versions (even if it does not prefer them because they are older and less capable), it echoes a two-byte datagram using the same protocol versions, or alternatively it just begins using those protocol versions by sending a datagram that uses them. Both the client and server then know that they are speaking the same versions, and the negotiation of application protocols begins (described further in the section on Application Protocols). The server knows the protocol versions because it echoed the datagram (acceptance); the client knows because it received a datagram with the same protocol versions that it sent.

|

Negotiation of version is in decreasing order of desire.

dof-2008-1-spec-10 Each node must have a list of the versions of DNP and DPP that it can speak. It orders these by most desirable to least. Negotiation always begins with the most desirable, and progresses to the least desirable. |

|

Accept the first valid negotiated versions.

dof-2008-1-spec-11 If a node receives a combination of versions of DNP and DPP that it can speak (meaning they are understandable), it must accept it even if it does not prefer to speak those versions (possibly because they are less optimal). |

If the server cannot understand the protocol versions requested, it responds with a two-byte datagram using the protocol versions that it prefers (potentially the only ones that it understands), and the roles of client and server are reversed (for the purposes of further negotiation). This means that the old 'client' node is now in the role of responding with an acceptance, with a datagram that begins using those versions, or with another 'rejection', in which case the roles are reversed yet again.

Upon receiving a two-byte datagram during negotiation with protocol versions that are not understood, the response is always either a two-byte datagram with the next preferred protocol versions that are understood or a full datagram that uses a set of versions. This proceeds until either side has no untried protocol versions or receives a bad datagram (a non-negotiation datagram that uses versions that are not understood), at which point the session is terminated.
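The exchange described above can be modeled as a short simulation. This sketch covers only the two-byte negotiation datagrams (it does not model assertion with a full datagram) and assumes each node holds its (DNP, DPP) pairs in an ordered list, most desirable first. The function name `negotiate` and the list representation are hypothetical.

```python
from typing import List, Optional, Tuple

Versions = Tuple[int, int]  # (DNP version, DPP version)


def negotiate(client_prefs: List[Versions],
              server_prefs: List[Versions]) -> Optional[Versions]:
    """Simulate negotiation; return the agreed pair, or None on termination."""
    prefs = (client_prefs, server_prefs)
    next_untried = [0, 0]  # index of each side's next untried pair
    if not client_prefs:
        return None
    # The client opens with its most desirable pair.
    offer = client_prefs[0]
    next_untried[0] = 1
    receiver = 1  # the server receives the first offer
    while True:
        if offer in prefs[receiver]:
            # Understood pairs must be accepted, even if not preferred.
            return offer
        if next_untried[receiver] >= len(prefs[receiver]):
            # No untried versions remain: the session is terminated.
            return None
        # Roles reverse: the receiver counters with its next untried pair.
        offer = prefs[receiver][next_untried[receiver]]
        next_untried[receiver] += 1
        receiver = 1 - receiver
```

For example, `negotiate([(2, 2), (1, 1)], [(3, 3), (1, 1)])` returns `(1, 1)`: the server rejects (2, 2) by countering with (3, 3), the client rejects that by countering with (1, 1), and the server accepts. Because every rejection consumes one untried pair, the loop always ends.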

It is common for devices to implement a single set of network and presentation protocol versions. It is also common for devices to initiate sessions with more powerful nodes. This means that in the typical case a client (the node sending the first negotiation bytes in the common case) speaks a single set of protocol versions. The same situation can apply to a server.

When the sender speaks only a single set of protocol versions, the first datagram sent may include the flag byte, control fields, and encapsulated application data. This is 'asserting' protocol versions. This kind of assertion is always a valid response during negotiation, and the client may immediately use it.

|

The presence of an application PDU determines the difference between 'asserting' and negotiating on a session. All negotiation PDUs lack an application PDU. All asserted PDUs contain an application PDU. |

|

During negotiation, immediately terminate 2-node session on receipt of unknown versions.

dof-2008-1-spec-12 During negotiation of a 2-node session, if the server or the client receives a non-negotiation datagram that includes protocol versions that it does not understand, the receiver must terminate the session. |

If the server speaks only a single set of protocols, it may preemptively send a full datagram with a flag byte, control fields, and application data when the transport session is established. The client would treat this as the end of negotiation. In the case that the client cannot speak those versions, the client terminates the session. If the client had also asserted and the versions did not match then the client and server would both terminate the session. This is correct behavior because an assertion is only possible if the node understands only a single set of versions. Any mismatch of asserted versions means communication is not possible.

|

During negotiation, when a node reaches the last set of versions it understands, it is optimal to assert those versions. For example, a node that speaks A1, B1 and A1, B2 may send A1, B2 as a negotiation datagram. Assuming that the other side continues negotiation (implying that those versions are not understood), then the node can continue to A1, B1. However, in this case if the other node tries to continue negotiation there are no more versions to try and the node will terminate the session. Asserting A1, B1 at that point saves the node from continuing to negotiate when it is pointless. |

|

DNP/DPP version negotiation must complete within 10 seconds.

dof-2008-1-spec-13 Measured from when the client establishes the transport session, if the stack cannot converge to an agreed set of network and presentation protocols within ten (10) seconds then the DPS session must terminate. Convergence is the time at which the transport client sends its first non-negotiation datagram. |
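One way to enforce this limit is a deadline object created when the transport session is established and checked until convergence. The sketch below is an assumption about structure, not a required design: the class name `NegotiationDeadline` and the injectable `clock` parameter are hypothetical, and a monotonic clock is used so that wall-clock adjustments do not affect the measurement.

```python
import time

NEGOTIATION_TIMEOUT_S = 10.0  # limit for DNP/DPP version negotiation


class NegotiationDeadline:
    """Tracks the negotiation time limit for one transport session."""

    def __init__(self, clock=time.monotonic):
        # Measurement starts when the transport session is established.
        self._clock = clock
        self._start = clock()

    def expired(self) -> bool:
        """True once more than ten seconds have passed without convergence."""
        return self._clock() - self._start > NEGOTIATION_TIMEOUT_S
```

An implementation would stop checking the deadline once the transport client has sent its first non-negotiation datagram, and would terminate the DPS session if `expired()` becomes true before then.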

4.1.5. Transport Addresses

Throughout the DPS documents, several references to transport addresses exist. The term 'transport address' means a unique identification of the source of some communication on a specific transport. It also identifies an individual target on a transport.

Different transports may use similar logical addresses. There can also be multiple interfaces speaking the same protocol with identical addresses. The stack should consider all these cases as different transport addresses.

There are transports that lack a well-defined sense of addresses, or where implementations make it necessary to add additional data in order to distinguish addresses. To resolve this problem the DPS adds its own sense of a logical address, called a DNP (DOF Networking Protocol) address. It is important to understand the relationship between DNP addresses and transport addresses.

|

Correctly distinguish transport addresses.

dof-2008-1-spec-14 Implementations must track the source of communication such that they can send responses back to the sender. This source (and response destination) is called the 'transport address,' even though it will likely need to contain additional information beyond the actual transport logical address. Implementations must permit DNP addresses, even if the transport itself provides unique addresses. |

In the case of sessions, the notion of 'transport address' really relates to the session and not just the transport. For example, it is possible to have both a UDP datagram session and a TCP streaming session that involves the same 'transport address.' In this case, the 'transport' is also part of the 'transport address,' even though in both cases the underlying transport is IP.
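The idea that a 'transport address' carries more than the logical address can be sketched as a composite key. The field names below (`transport`, `interface`, `host`, `port`) are hypothetical and chosen only for illustration; the addresses and port number are arbitrary example values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TransportAddress:
    """Hypothetical composite key identifying a source or target."""
    transport: str   # kind of session, for example "udp" or "tcp"
    interface: str   # local interface that carries the traffic
    host: str        # transport logical address
    port: int


# The same host and port over UDP and TCP are different transport addresses.
udp_peer = TransportAddress("udp", "eth0", "192.0.2.10", 5000)
tcp_peer = TransportAddress("tcp", "eth0", "192.0.2.10", 5000)

# The same logical address seen on another interface is also distinct.
other_interface = TransportAddress("udp", "eth1", "192.0.2.10", 5000)
```

Because the dataclass is frozen, instances are hashable and compare by value, so they can serve directly as dictionary keys for per-session state.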

This distinction is critical because many stack issues relate to the session, and not directly to the 'transport address' when used in the more limited sense.

4.1.6. Loopback Prevention

A common issue with broadcast and multicast transports is receiving datagrams that the node itself sent, either because of operating system problems or because of network echoes. In order to prevent each layer of the DOF Protocol Stack from needing to address this issue, the transport layer of the DPS must ignore these datagrams.

The transport (referring to the layer directly below the DNP) is uniquely able to remove these echoes because only it is aware of the specific meaning of transport addresses. It also knows (or can know) the different types of sessions and servers that are in use, and whether it is possible for loopback to occur.

In addition, if a transport is not able to determine on its own whether loopback will occur, there is a specific DNP version (127, described later) which a transport implementation can use to determine if loopback is occurring.

|

DPS transports must correctly reject loopback datagrams sent from the same application.

dof-2008-1-spec-15 As described, all DPS transport implementations must correctly reject datagrams that originate from the same application. Application protocols may rely on the fact that they will not receive loopback datagrams. |

Stated another way, all DPS transport implementations must reject inbound multicast or broadcast datagrams that were sent by the receiving application. Pay particular attention to the wording: datagrams from the same application must be rejected, not datagrams from the same node.

In general, this means that implementations must compare the source information (for example, host and port for UDP) for inbound datagrams with the source information for each session for the receiving application. If the source information matches, then the receiver drops the datagram. If such a comparison is not possible or may not produce precisely correct results, then the implementation may use DNP version 127 to send a datagram and watch for loopback.

As a further example, two applications running on the same node may be receiving multicast datagrams. Both applications hear datagrams sent from the first application, but only the first application drops them. The same is true for the second application and its datagrams.
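The source comparison can be sketched for a UDP-like transport as follows. This is a simplified model that assumes host and port are sufficient to identify the sending application; the class name `LoopbackFilter` and its methods are hypothetical.

```python
class LoopbackFilter:
    """Rejects inbound datagrams that this application itself sent."""

    def __init__(self):
        # Source (host, port) pairs used by this application's sessions.
        self._local_sources = set()

    def register_session(self, host: str, port: int) -> None:
        """Record the source information of one outbound session."""
        self._local_sources.add((host, port))

    def accept(self, src_host: str, src_port: int) -> bool:
        """Return False for datagrams whose source matches a local session."""
        return (src_host, src_port) not in self._local_sources
```

With one filter per application, two applications on the same node behave as in the example above: each rejects only datagrams whose source matches one of its own sessions and accepts those sent by the other application.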

4.1.7. Invalid PDU Handling

Receiving any invalid PDU signals either a corrupt communication channel, loss of state, or a possible attack on the protocol. Invalid PDUs include things like invalid op-codes, malformed flags or other fields, PDU underruns and overruns during parsing, or other structural problems. Invalid PDUs do not include cases where the structure and references are correct, but the operation fails to execute for other reasons.

Throughout the entire DOF Protocol Stack and all related application protocols, the requirements for handling invalid PDUs are identical. The primary consideration is maintaining correct session state (both transport and DPS). If session state cannot be ensured, then the session (whether transport or DPS) must be closed.

For session 'none', DPS state management is not a concern, because there is no DPS session state by definition. However, there is still transport state to manage. For example, streaming protocols like TCP may not be able to identify PDU boundaries without state. If this state is confused by an invalid PDU then the transport session state is lost.

In most cases, datagram PDUs can be dropped without loss of state, while streaming PDUs cannot be dropped without loss of state. Streaming sessions usually have an in-order, guaranteed delivery feature that is leveraged to minimize state transferred in each PDU (for example, packet sequence numbers for security). In these cases, dropping PDUs will affect the state and must therefore result in the session being closed.
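The typical policy described above can be sketched as a small decision function. It encodes only the common case (datagram PDUs can be dropped, streaming sessions must close) plus the general rule that a session closes whenever its state cannot be ensured. The enum and function names are hypothetical.

```python
from enum import Enum


class SessionKind(Enum):
    DATAGRAM = "datagram"
    STREAMING = "streaming"


class Action(Enum):
    DROP_PDU = "drop-pdu"
    CLOSE_SESSION = "close-session"


def on_invalid_pdu(kind: SessionKind, state_ensured: bool) -> Action:
    """Choose the response to an invalid PDU on a session of the given kind.

    `state_ensured` reports whether the implementation can still guarantee
    correct session state after discarding the PDU.
    """
    if kind is SessionKind.STREAMING:
        # Dropping a PDU from an in-order stream disturbs shared state
        # (for example, PDU boundaries or sequence numbers).
        return Action.CLOSE_SESSION
    if not state_ensured:
        # General rule: close the session when state cannot be ensured.
        return Action.CLOSE_SESSION
    return Action.DROP_PDU
```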

|

Correctly handle invalid PDUs, dropping sessions when correct state cannot be ensured.

dof-2008-1-spec-16 As described, all DPS implementations must correctly handle invalid PDUs. If correct session state (whether transport or DPS) cannot be ensured after an invalid PDU is received, then the affected session must be closed. |

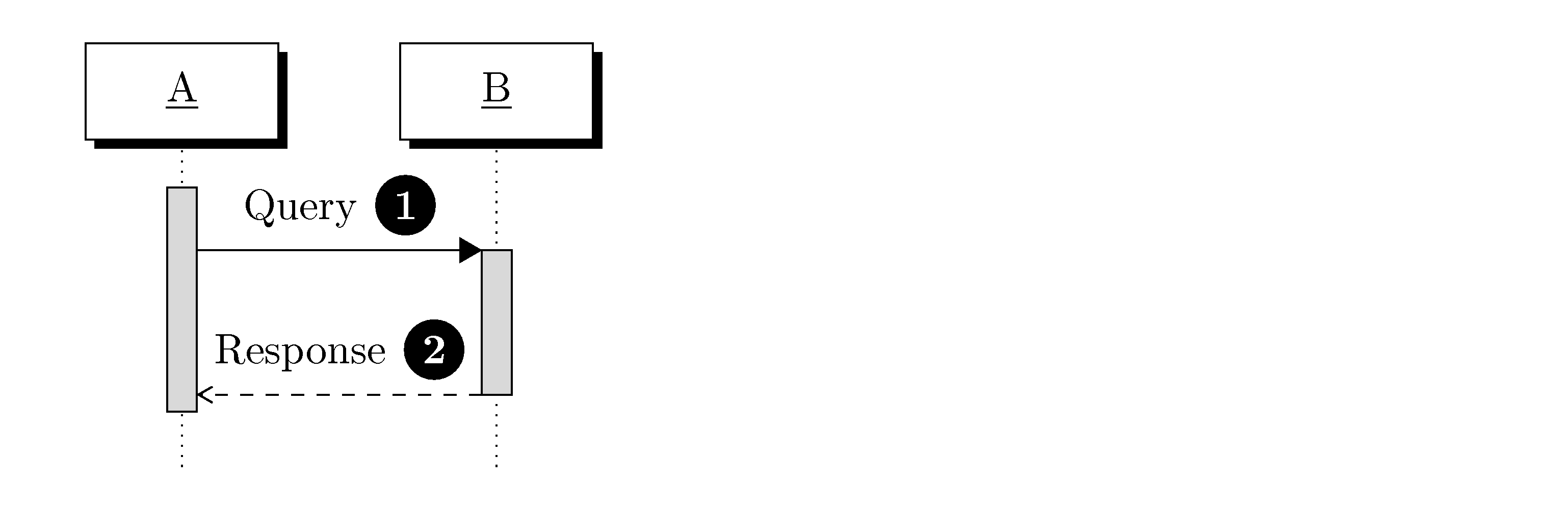

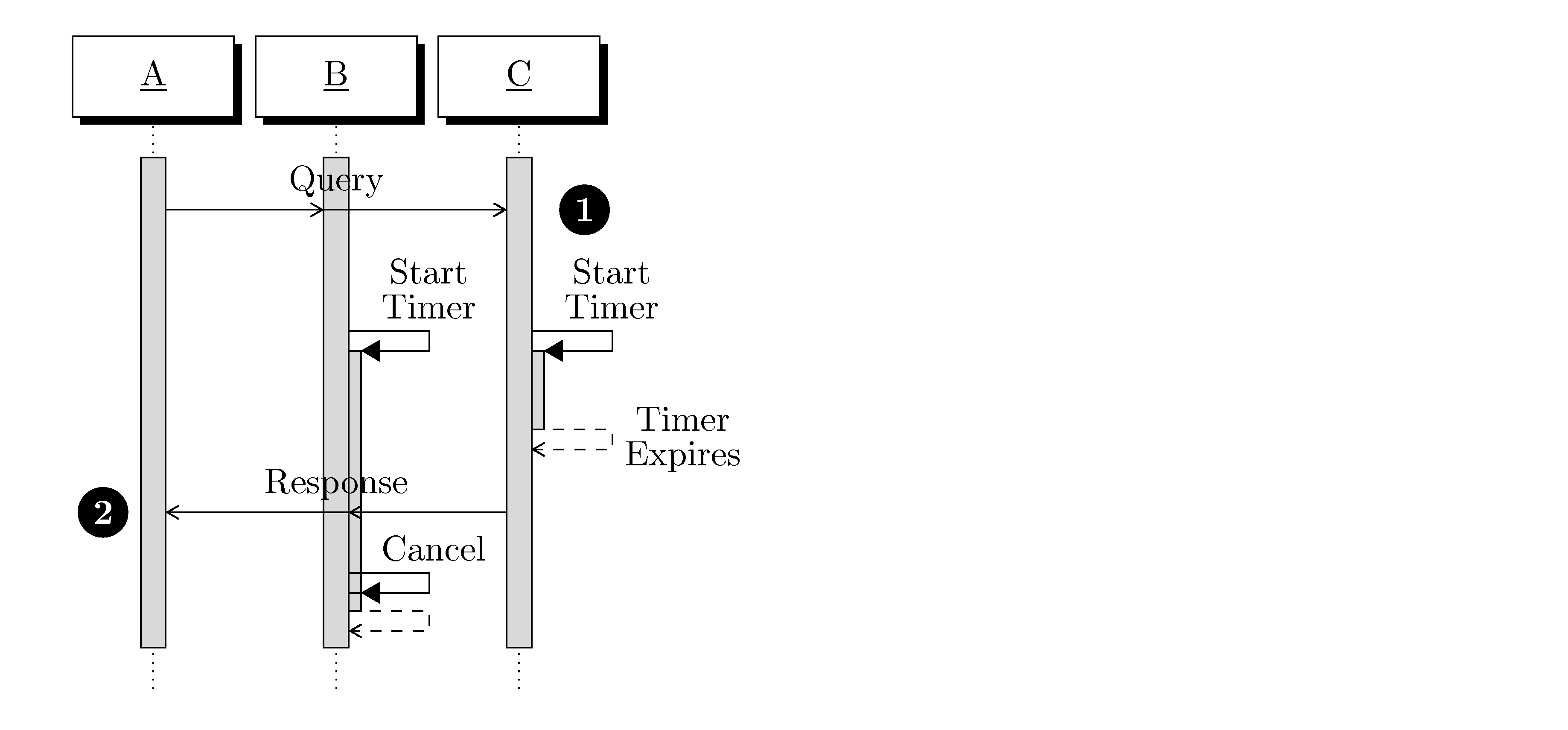

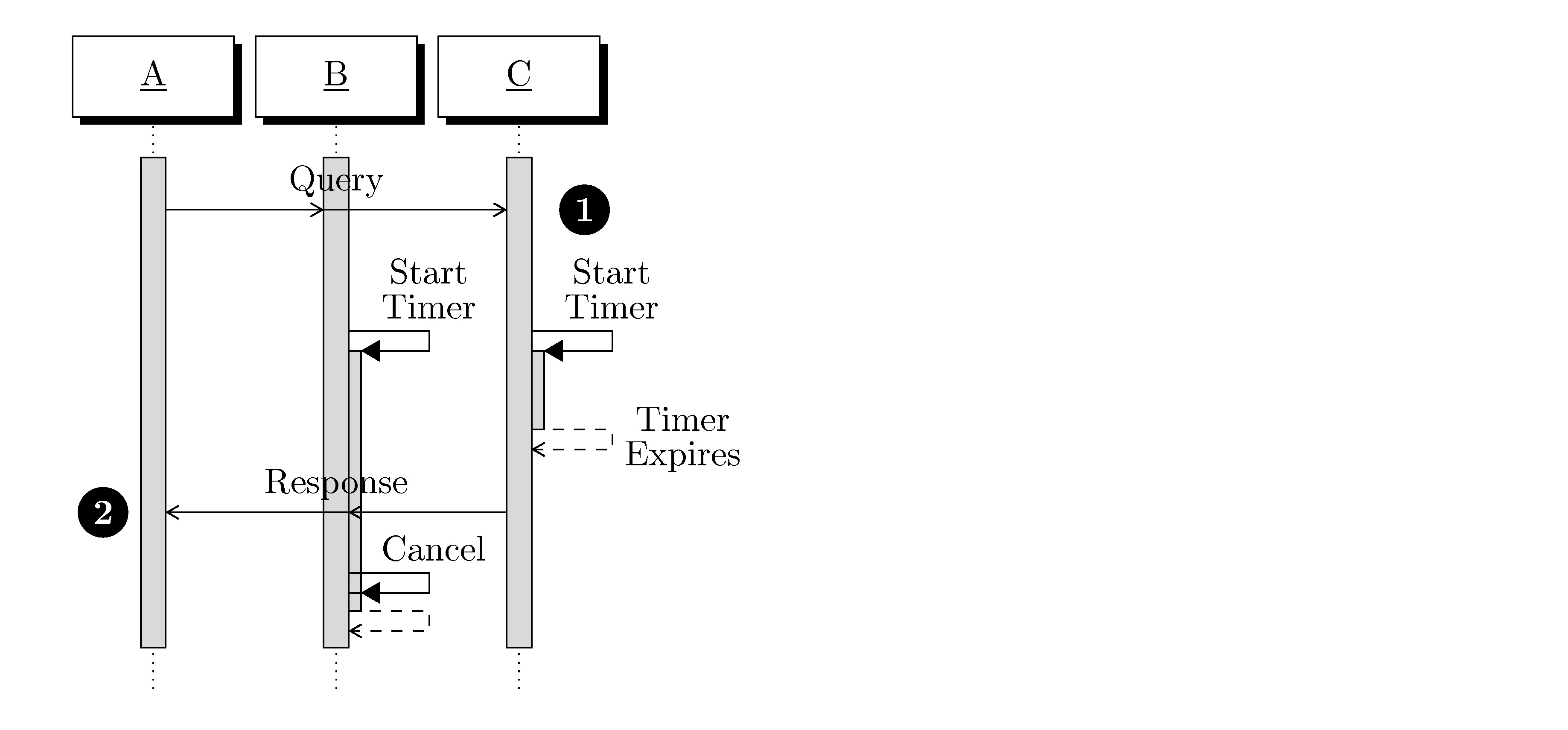

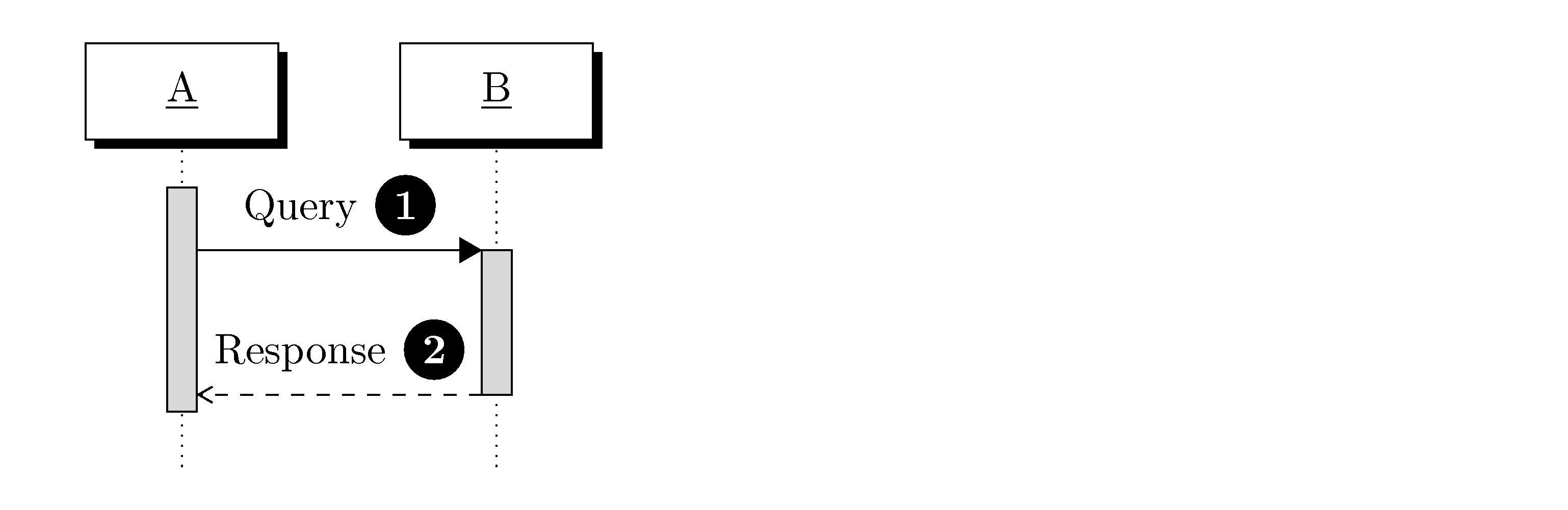

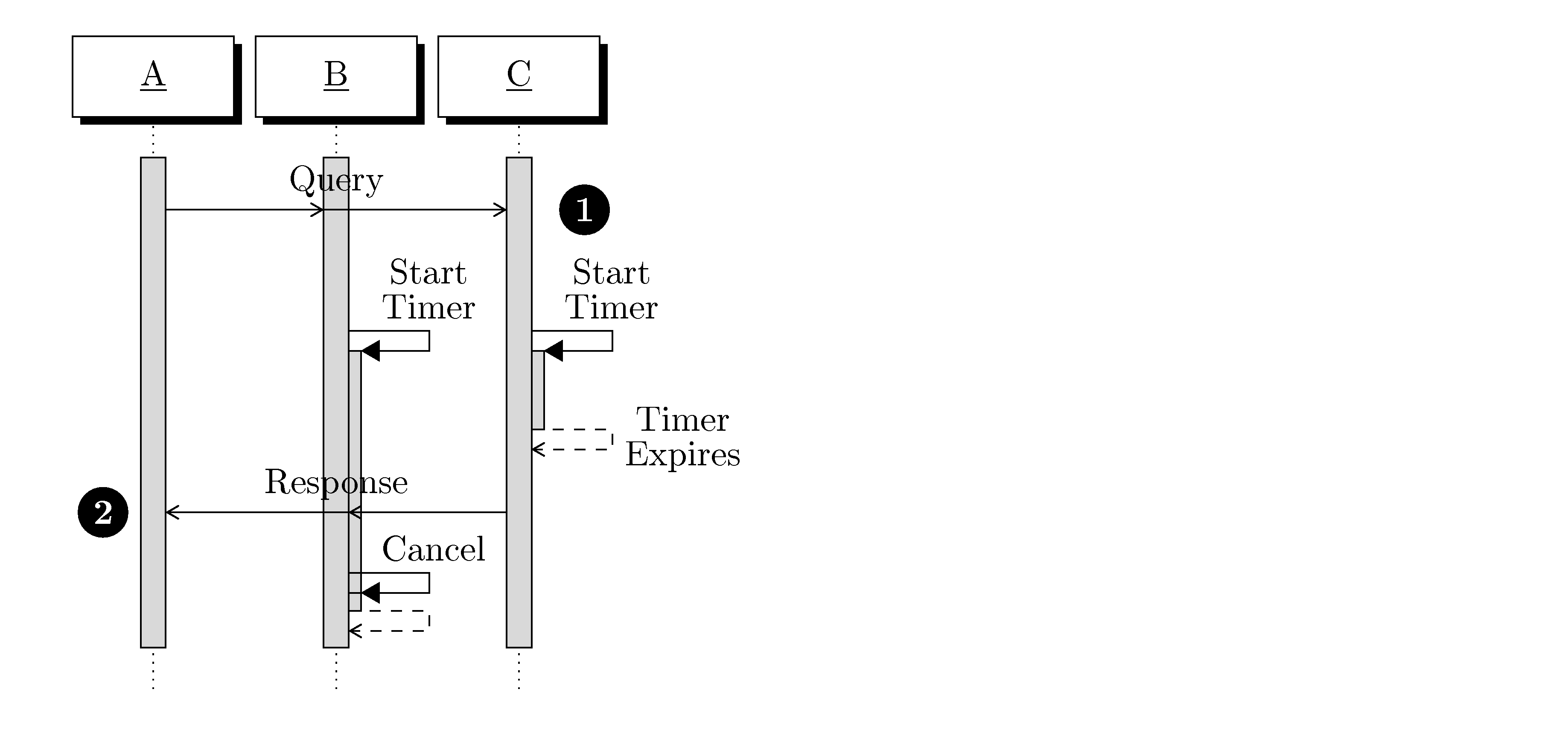

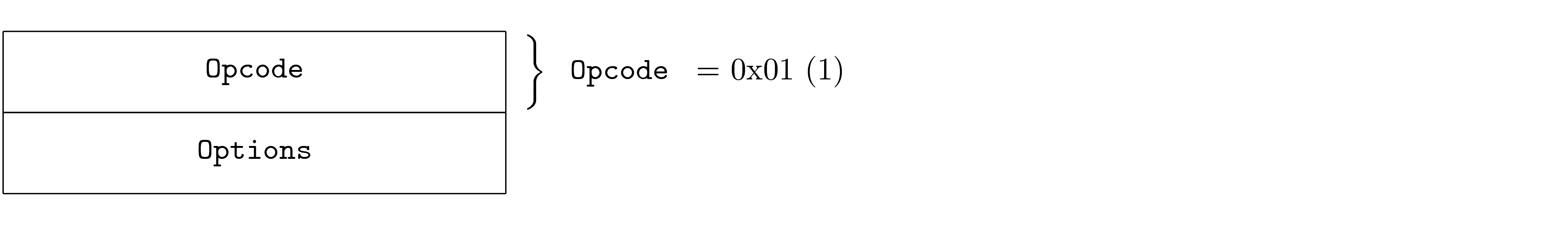

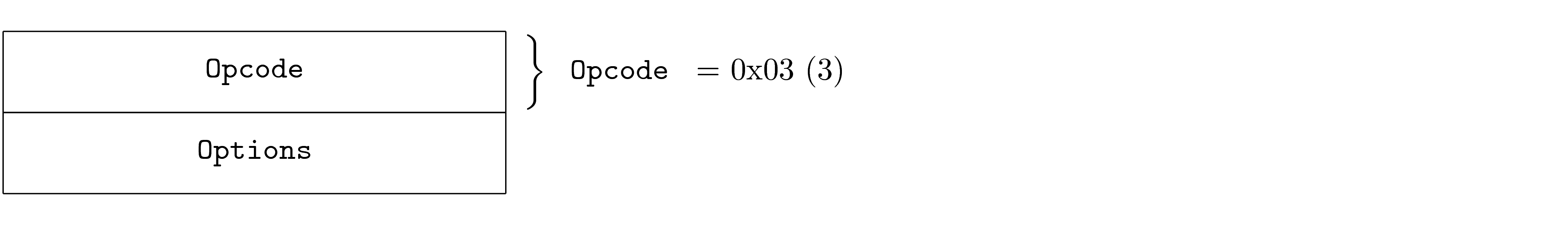

4.2. Operations